Today's recommended open-source project: "Quicker PowerToys"

Today's recommended English article: "Three Easy Ways to Improve Web Performance"

Today's recommended open-source project: "Quicker PowerToys" (link: GitHub)

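Why we recommend it: PowerToys is an upcoming set of enhancement utilities for Windows that helps users get around the system more quickly. Put simply, it adds features that streamline common operations, such as pressing the Windows key to pop up an overview of the available shortcuts. The first preview release is due out this summer, so there is plenty to look forward to in the convenience these shortcuts could bring to everyday work.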

Today's recommended English article: "Three Easy Ways to Improve Web Performance" by Rachel Lum

Original article: https://medium.com/@lumrachele/three-easy-ways-to-improve-web-performance-f9ca7e5caf32

Why we recommend it: it explains how to improve the user experience while web pages load.

Three Easy Ways to Improve Web Performance

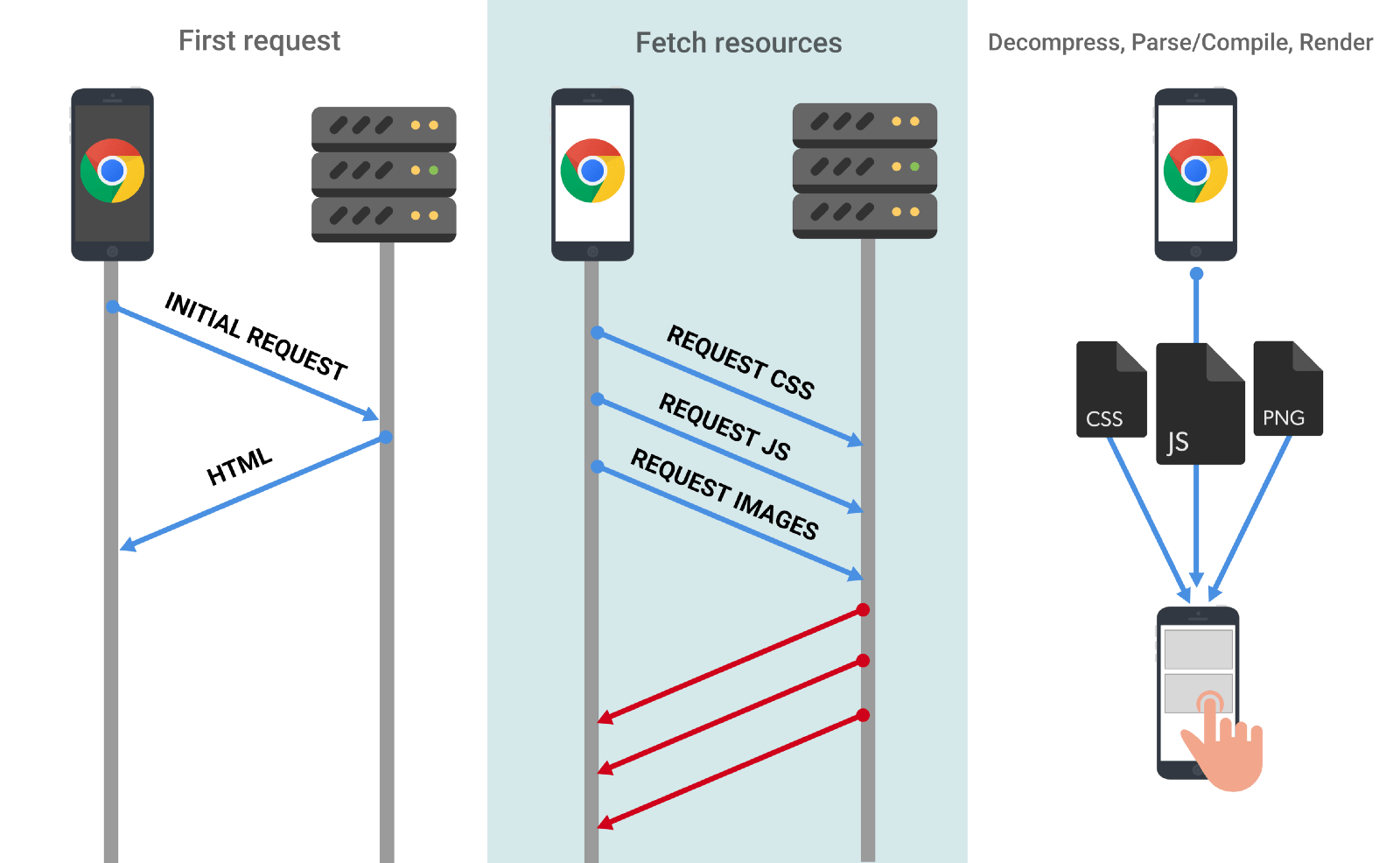

JavaScript Download and Execution Cost

I attended a meetup a couple of weeks ago and learned about some of the incredible Google developer tools, available as Chrome extensions, on the command line, or as Node modules. So far I have used Google Lighthouse, which audits a web page and generates a report covering performance, accessibility, progressive web app (PWA) criteria, and more. The report also explains which factors contributed to each score and gives clear suggestions, with documentation, for improving it.

Before one of my interviews, I ran Google Lighthouse on the company’s website to check its performance (I heard that doing this could score you extra brownie points). It turned out the site scored 27 out of 100 for performance, which put it in the red zone. Big OOF!

The main culprits:

- Main-thread work

- JavaScript execution time

- Enormous network payloads

“JavaScript gets parsed and compiled on the main thread. When the main thread is busy, the page can’t respond to user input. […] JavaScript is also executed on the main thread.” According to the Google Lighthouse report, main-thread work added a whopping 10.2 seconds to the load time; this includes parsing, compiling, and executing JavaScript. On top of that, the site carried a network payload of 4,327 KB, which is definitely not optimal. The more JavaScript a page ships, the longer the download takes, the higher the network cost, and, unfortunately, the more unpleasant the user experience.
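To put the 4,327 KB payload in perspective, here is a back-of-the-envelope estimate of download time alone. It is a sketch under assumptions that do not come from the article: 1 KB = 1,024 bytes, a steady 1.6 Mbps downlink standing in for a slow mobile connection, and no latency or connection-setup overhead. Real downloads are slower, and the parsing, compiling, and execution time that Lighthouse measured comes on top of this.

```typescript
// Rough transfer-time estimate: payload size in bits divided by link throughput.
// Assumptions (not from the article): 1 KB = 1,024 bytes, 1 Mbps = 1,000,000 bits/s,
// constant throughput, and no latency or connection-setup overhead.
function transferSeconds(payloadKB: number, downlinkMbps: number): number {
  const bits = payloadKB * 1024 * 8;
  const bitsPerSecond = downlinkMbps * 1_000_000;
  return bits / bitsPerSecond;
}

// The payload from the audit, on an assumed 1.6 Mbps mobile connection.
const seconds = transferSeconds(4327, 1.6);
console.log(`${seconds.toFixed(1)} s`); // prints "22.2 s"
```

Because the estimate is linear in payload size, halving the JavaScript a page ships halves this part of the wait.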

So how can we reduce the amount of main-thread work and the time it takes?

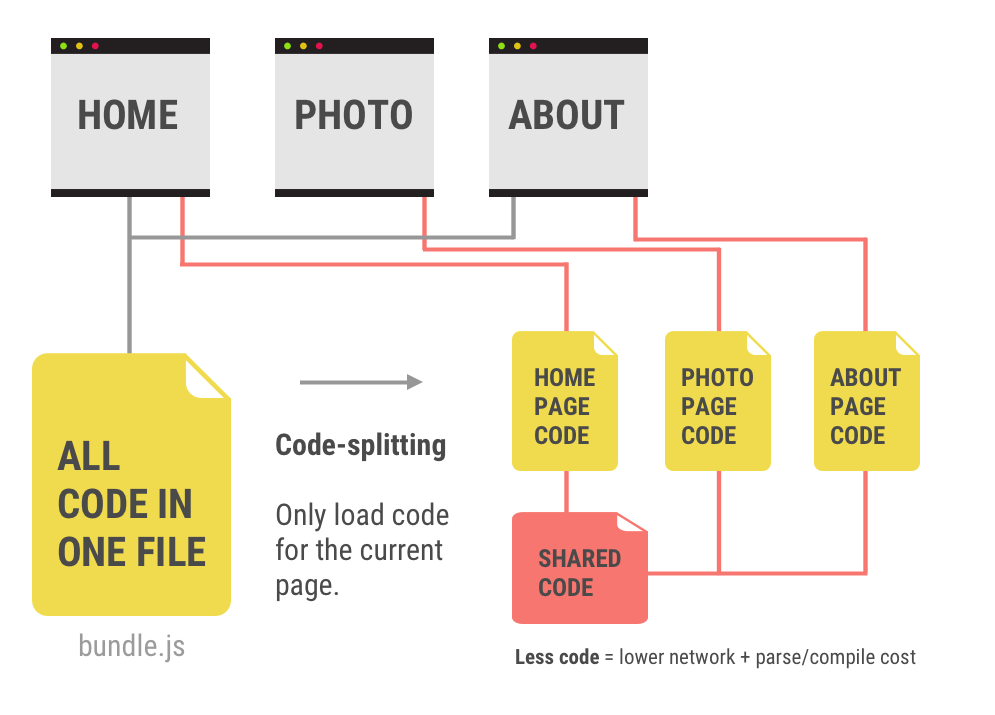

Code Splitting

If you try to eat a whole sandwich at once, it will probably take you longer than if you take one small bite at a time and digest each bite in turn. Similarly, if you deliver all of your JavaScript in one massive bundle, the browser has to download, parse, and compile all of that code before it can execute it to render the page. Code splitting delivers JavaScript in smaller chunks instead, so each chunk can be parsed, compiled, and handed to the execution environment sooner. With smaller chunks of JavaScript, you can reduce load time and improve web performance.

Three different ways of code-splitting:

- Vendor splitting separates anything you can consider a “dependency” or third-party code into its own bundle, conventionally named “vendor”. When either your app code or your vendor code changes, only the affected bundle changes, so browsers can keep using their cached copy of the other.

- Entry point splitting splits code by the entry points of your app, the scripts where tools like webpack start building the dependency graph. It is recommended for apps that don't use client-side routing, or that use a mix of server-side and client-side routing.

- Dynamic splitting splits code at dynamic “import()” statements and is recommended for single-page applications.
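Dynamic splitting hinges on the `import()` expression: it returns a promise for a module, and bundlers such as webpack treat each `import()` call site as a split point and emit that module as a separate chunk. The minimal sketch below defers loading a module until a function is first called. So that it runs outside a bundler, Node's built-in `node:crypto` module stands in for a heavy feature module; the `checksum` function is hypothetical.

```typescript
// Dynamic splitting sketch. node:crypto stands in for a heavy feature module;
// in a bundled browser app the import() target would be one of your own modules.
async function checksum(text: string): Promise<string> {
  // Nothing is loaded until the first call; later calls reuse the module cache.
  const { createHash } = await import("node:crypto");
  return createHash("sha256").update(text).digest("hex");
}

checksum("abc").then((hex) => console.log(hex));
```

In a browser app the same shape might look like `button.addEventListener("click", async () => { const { drawChart } = await import("./chart"); drawChart(); })`, where `./chart` and `drawChart` are hypothetical names. The chart code is then only downloaded when a user actually asks for it.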

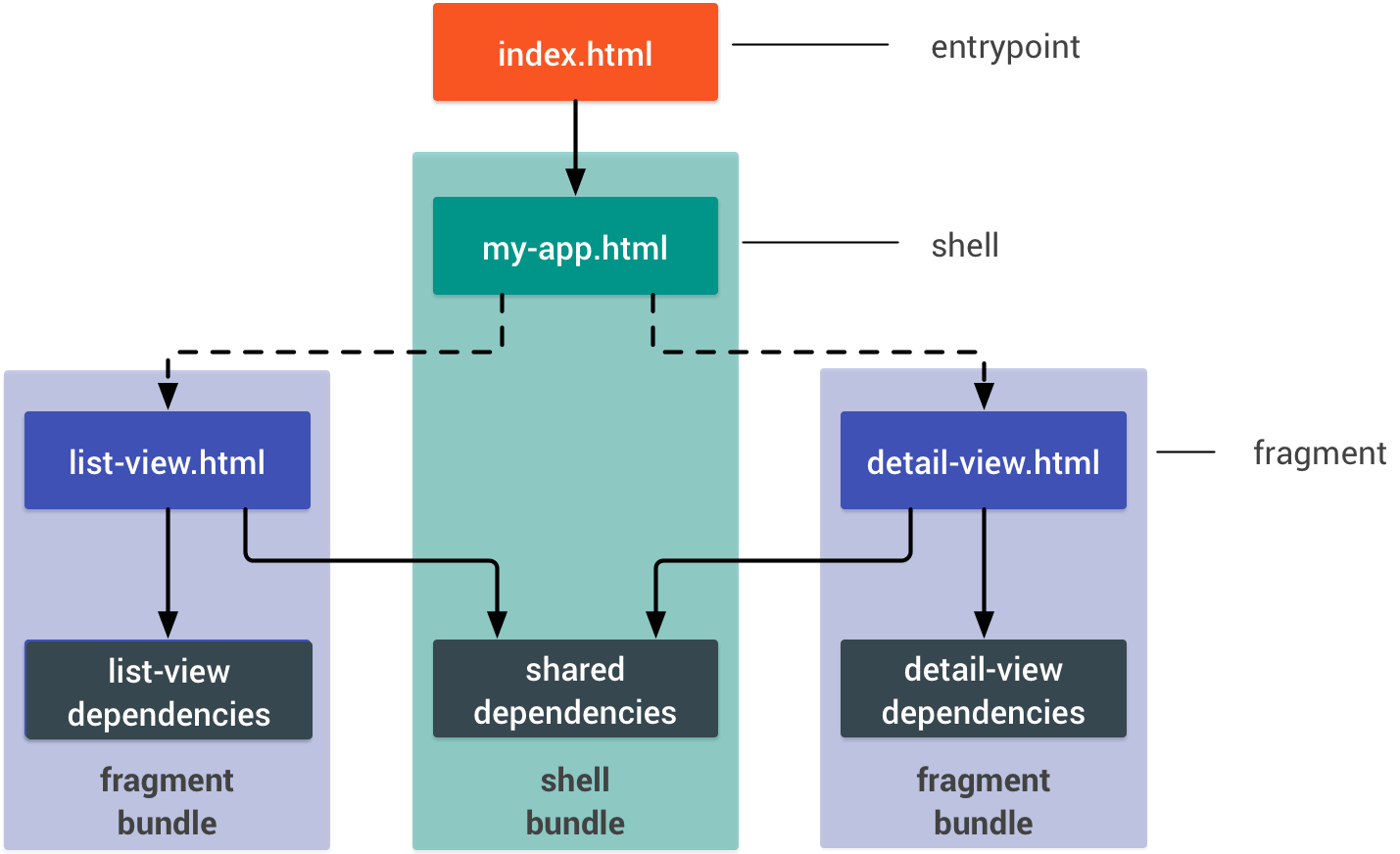

PRPL

- Push critical resources for the initial URL route.

- Render initial route.

- Pre-cache remaining routes.

- Lazy-load and create remaining routes on demand.

The Google documentation explains PRPL clearly, but as a quick rundown, PRPL works best with the following app structure:

- the entry point: index.html, which should be kept as small as possible so it can be delivered and parsed quickly

- the shell: top-level app logic, routing, main UI, and static dependencies

- fragments: anything not immediately visible at DOMContentLoaded. This is where lazy loading comes into play.
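The PRPL steps and the app structure above can be sketched as a small route table: the shell renders the current route first and fetches the other fragments only on demand or during a pre-cache step. Everything here is hypothetical, including the `Shell` class, the `Fragment` shape, and the loader functions, which stand in for `() => import("./views/about")`-style calls that a bundler would turn into separate chunks.

```typescript
// Hypothetical shape of a lazily loaded route module (a PRPL "fragment").
interface Fragment {
  render(): string;
}

// In a bundled app each loader would be something like () => import("./views/about").
type Loader = () => Promise<Fragment>;

class Shell {
  private readonly routes: Map<string, Loader>;
  // Cache the promise, not the result, so concurrent requests share one fetch.
  private readonly cache = new Map<string, Promise<Fragment>>();

  constructor(routes: Record<string, Loader>) {
    this.routes = new Map(Object.entries(routes));
  }

  private load(path: string): Promise<Fragment> {
    let pending = this.cache.get(path);
    if (pending === undefined) {
      const loader = this.routes.get(path);
      if (loader === undefined) {
        return Promise.reject(new Error(`unknown route: ${path}`));
      }
      pending = loader();
      this.cache.set(path, pending);
      // Forget failed loads so a later navigation can retry them.
      pending.catch(() => this.cache.delete(path));
    }
    return pending;
  }

  // Render: fetch (or reuse) the fragment for the requested route only.
  async render(path: string): Promise<string> {
    const fragment = await this.load(path);
    return fragment.render();
  }

  // Pre-cache: start loading every route, reusing any that are already cached.
  precacheRemaining(): Promise<Fragment[]> {
    return Promise.all(Array.from(this.routes.keys(), (path) => this.load(path)));
  }
}
```

Only the shell and the current route's fragment are needed for the first render; everything else arrives through `precacheRemaining` or on navigation. In a production app the pre-cache step is usually handled by a service worker rather than by in-memory promises like these.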

Other ways to reduce payload size include minifying JavaScript, HTML, and CSS, applying text compression such as gzip, and choosing optimal image formats and compression levels.
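Text compression is normally switched on in the web server or CDN rather than in application code, but Node's built-in `zlib` module makes it easy to see why it pays off for text assets. The input below is made-up, highly repetitive JavaScript-like text, so real files will compress less dramatically.

```typescript
import { gzipSync } from "node:zlib";

// Made-up, repetitive source text standing in for a JavaScript bundle.
const source = "function add(a, b) { return a + b; }\n".repeat(500);

const original = Buffer.byteLength(source, "utf8");
const compressed = gzipSync(source).length;

console.log(`original: ${original} bytes, gzipped: ${compressed} bytes`);
console.log(`saved: ${(100 * (1 - compressed / original)).toFixed(1)}%`);
```

Code is full of repeated keywords and identifiers, which is exactly what gzip's back-references exploit; minifying first and then compressing usually shrinks files further than either step alone.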

Lazy Loading

When an image scrolls into the viewport, a placeholder is shown first and quickly replaced with the actual image.

Lazy loading is exactly what it sounds like: a resource is ready to load, but it is only loaded later, when you need it. A quick way to save data and processing time is to defer loading offscreen resources until they scroll into the viewport. For images, you can listen for events such as “scroll” or “resize”, but modern browsers also offer the Intersection Observer API.
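With the event-handler approach, every `scroll` or `resize` callback has to answer one question: does this element overlap the viewport? A minimal sketch of that check, written against plain numbers so it runs anywhere; in a browser the rectangle would come from `element.getBoundingClientRect()` and the viewport size from `window.innerWidth` and `window.innerHeight`. The `margin` parameter, which starts loading slightly before an element appears, is an assumption of this sketch.

```typescript
// The subset of getBoundingClientRect() values this check needs.
interface Rect {
  top: number;
  bottom: number;
  left: number;
  right: number;
}

// True if the rectangle overlaps the viewport, optionally expanded by
// `margin` pixels on every side so loading can start a little early.
function isInViewport(
  rect: Rect,
  viewportWidth: number,
  viewportHeight: number,
  margin = 0,
): boolean {
  return (
    rect.top < viewportHeight + margin &&
    rect.bottom > -margin &&
    rect.left < viewportWidth + margin &&
    rect.right > -margin
  );
}
```

Scroll events fire very often, so a real handler should be throttled and should stop listening once every lazy image has loaded.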

In both cases, you give the code some kind of marker so it knows which resources to watch and can tell when they enter the viewport. For an image, give the <img> tag a class of “lazy”, point its src at a placeholder image, and store the actual image URL in a data attribute such as data-src. For background images on divs, use the classes “.lazy-background” and “.lazy-background.visible”, adding “visible” once the div enters the viewport. As you might expect, lazy-loading libraries already exist to speed up implementation, so you don’t have to dig into everything that happens behind the scenes. Don’t you love how developers make other developers’ lives easier?
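With the Intersection Observer API, the browser reports when a watched element enters the viewport, so there is no need to poll on scroll. The sketch below assumes markup like `<img class="lazy" src="placeholder.jpg" data-src="photo.jpg">`; the `lazy` class comes from the text, while the `data-src` attribute, the function names, and the eager-loading fallback for browsers without the API are assumptions. The swap logic lives in its own function so it can be exercised without a browser.

```typescript
// Minimal structural type so the swap logic can be tested without a DOM;
// HTMLImageElement satisfies it.
interface LazyImage {
  src: string;
  dataset: { src?: string };
  classList: { remove(token: string): void };
}

// Swap the placeholder for the real image and drop the "lazy" marker class.
function activateLazyImage(img: LazyImage): void {
  if (img.dataset.src) {
    img.src = img.dataset.src;
  }
  img.classList.remove("lazy");
}

// Browser-only wiring: watch every img.lazy and activate it once it is visible.
function observeLazyImages(root: ParentNode = document): void {
  const images = root.querySelectorAll<HTMLImageElement>("img.lazy");
  if (typeof IntersectionObserver === "undefined") {
    // Assumed fallback: without the API, simply load everything now.
    images.forEach((img) => activateLazyImage(img));
    return;
  }
  const observer = new IntersectionObserver((entries) => {
    for (const entry of entries) {
      if (entry.isIntersecting) {
        activateLazyImage(entry.target as HTMLImageElement);
        observer.unobserve(entry.target); // each image only needs to load once
      }
    }
  });
  images.forEach((img) => observer.observe(img));
}
```

In a page, calling `observeLazyImages()` once the DOM is ready is enough; unobserving each image after it loads keeps the observer's work shrinking as the user scrolls.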

Conclusion

The PRPL pattern is the gold standard, especially in mobile development, for minimizing network payload and organizing your code architecture efficiently. It practically implies applying code splitting and lazy loading as best practices. Code splitting breaks your code into manageable pieces for the execution environment to handle, which declutters the main thread. Lazy loading can save memory and reduce load time by fetching resources, especially media files, only when they are needed. With these three simple techniques, you can significantly reduce your time to interactive and create a much better user experience.

- Only send the code that your users need.

- Minify your code.

- Compress your code.

- Remove unused code.

- Cache your code to reduce network trips.

Source: developers.google.com
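The caching tip pairs well with vendor splitting: if bundle file names contain a hash of their contents (webpack's `[contenthash]` placeholder produces such names), those files can be cached for a long time, while the small HTML entry point is revalidated on every visit. A sketch of the header choice a server might make; the `cacheControlFor` function and the file-name pattern (a dot, at least eight hex characters, then `.js` or `.css`) are assumptions of this example.

```typescript
// Assumed naming convention: name.<hex content hash>.js or .css, e.g. "vendor.3f2a9c1b.js".
const HASHED_ASSET = /\.[0-9a-f]{8,}\.(?:js|css)$/;

function cacheControlFor(path: string): string {
  if (HASHED_ASSET.test(path)) {
    // New content gets a new file name, so this URL's content never changes.
    return "public, max-age=31536000, immutable";
  }
  // Entry points such as index.html must be revalidated to pick up new hashes.
  return "no-cache";
}
```

With this split, changing app code produces a new app bundle name and a new index.html, while the unchanged vendor bundle keeps its name and stays in the browser cache.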

Download the Open Source Daily app: https://openingsource.org/2579/

Join us: https://openingsource.org/about/join/

Follow us: https://openingsource.org/about/love/