Today’s recommended open-source project: “Terminal, a Command Line 2.0”

Today’s recommended English article: “In the future, you may be fired by an algorithm”

Today’s recommended open-source project: “Terminal, a Command Line 2.0” (link: GitHub)

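Why we recommend it: Most of what ships with an operating system is constrained by something called “backward compatibility”, a requirement that when software is updated, older versions must keep working alongside the new one. This time Windows plans to break free of those legacy constraints and start a new command-line tool that adds more useful features. The first release is not expected until next month, so the new features are something to look forward to.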

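Today’s recommended English article: “In the future, you may be fired by an algorithm”, by Michael Renz

Original link: https://towardsdatascience.com/in-the-future-you-may-be-fired-by-an-algorithm-35aefd00481f

Why we recommend it: Decisions made by algorithms need to become fairer. After all, the data that algorithms are built from is itself created by humans, and humans inevitably carry certain biases.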

In the future, you may be fired by an algorithm

Algorithms determine the people we meet on Tinder, recognize our faces to open keyless doors, and fire us when our productivity drops. Machines are used to make decisions about health, employment, education, finances, and criminal sentencing. Algorithms are used to decide who gets a job interview, who gets a donor organ, and how an autonomous car reacts in a dangerous situation.

Algorithms reflect and reinforce human prejudices

There is a widespread misconception that algorithms are objective because they rely on data, and data does not lie. People perceive mathematical models as trustworthy because they represent facts. We often forget that algorithms are created by humans, who select the data and train the algorithm. Human-sourced bias inevitably creeps into AI models, and as a result, algorithms reinforce human prejudices. For instance, a Google Images search for “CEO” produced 11 percent women, even though 27 percent of United States chief executives are women.

Understanding human-based bias

While Artificial Intelligence (AI) bias should always be taken seriously, the accusation itself should not be the end of the story. Investigations of AI bias will be around as long as people are developing AI technologies. This means iterative development, testing, and learning, and AI’s advancement may go beyond what we previously thought possible, perhaps even going where no human has gone before. Nevertheless, biased AI is nothing to be taken lightly, as it can have serious, life-altering consequences for individuals. Understanding when and in what form bias can affect the data and the algorithms becomes essential. One of the most obvious and common problems is sample bias, where data is collected in such a way that some members of the intended population are less likely to be included than others. Consider a model used by judges to make sentencing decisions. Obviously, it is unsuitable to take race into consideration: for historical reasons, African-Americans are disproportionately sentenced, which leads to racial bias in the statistics. But what about health insurance? In this context, it is considered perfectly normal to treat males differently from females, but to an AI algorithm it might not be so obvious when such a prohibition applies. We humans have a complex view of morality: sometimes it’s fine to consider attributes like gender, and sometimes it’s against the law.

The problem with AI ethics

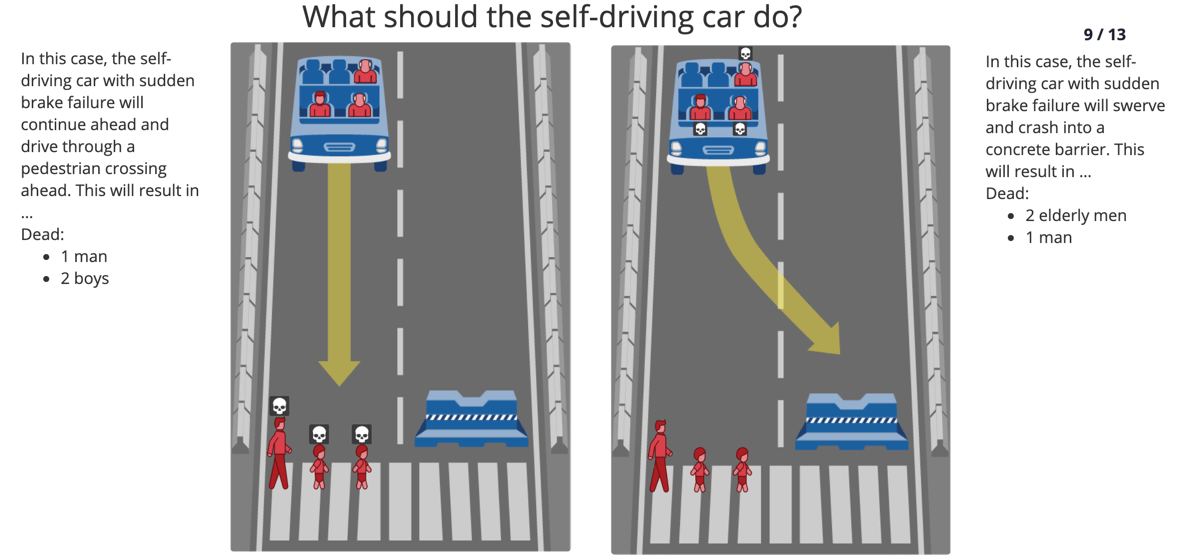

Data sets are not perfect. They are dirty by nature, and the moment we start cleaning them, human bias creeps in. Still, the key problem is not the existence of bias. The deeper problem is the absence of a common understanding of morality, because it is not actually defined what the absence of bias should look like. This isn’t true just in computer science; it’s a challenge for humanity. One of the biggest online experiments on AI ethics in the context of autonomous cars is the “Trolley dilemma”, which collected 40 million moral decisions from people in 233 countries and territories. Unsurprisingly, the researchers found that countries’ preferences differ widely: humans have different definitions of morality. The trolley problem is just an easy way to grasp the depth and complexity of ethics in data science, and needless to say, the issue goes beyond self-driving cars. This leads to the question of how a machine can make “fair” decisions while acknowledging individual and cross-cultural ethical variation. Can we expect an algorithm that has been trained by humans to be better than society?

Moral Dilemma where a self-driving car must make a decision

Creepy things A.I. starts to do on its own

Admittedly, we are decades away from the science fiction scenario where AI becomes self-aware and takes over the world. But as of today, algorithms are no longer static: they evolve over time by automatically modifying their own behavior, and these modifications can introduce so-called AI-induced bias, an evolution that can go far beyond what was initially defined by humans. At some point, we may (or may not) need to simply accept the outcome and trust the machine.

Way forward: Design for fairness

Moving forward, our reliance on AI will deepen, inevitably raising many ethical issues as humans hand decisions over to machines, putting health, justice, and business into the hands of algorithms with far-reaching impact that affects everyone. For the future of AI, we’ll need to answer tough ethical questions, which require a multi-disciplinary approach and awareness at the leadership level, and which should not be handled only by experts in technology. Data science knowledge needs to become a standard skill for the future workforce. Like fire, machine learning is both powerful and dangerous, so it is vital that we figure out how to promote the benefits and minimize the harm. This is not limited to the bias that concerns people; it is also about providing transparency in decision making, clarifying accountability, and safeguarding the core ethical values of AI, such as equality, diversity, and lack of discrimination. Over the next years, the ability to embed ethical awareness and regulation will emerge as a key challenge, one that can only be tackled through close collaboration between government, academia, and business. Regardless of the complexity of the challenge, it’s an exciting time to be alive and to shape the AI revolution, which has the potential to improve the lives of billions of people around the world.

Written by

Cyrano Chen, Senior Data Scientist

Joanne Chen, Head of Communication

Michael Renz, Director of Innovation

Download the Open Source Daily (开源日报) app: https://openingsource.org/2579/

Join us: https://openingsource.org/about/join/

Follow us: https://openingsource.org/about/love/