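Today's recommended open source project: "Some Music: awesome-music"

Today's recommended English article: "A.I. Demilitarisation Won't Happen"

Today's recommended open source project: "Some Music: awesome-music". Link: GitHub link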

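Why we recommend it: This project is a list covering many aspects of music: editors, libraries, composition tools, music theory tutorials, and more. If you happen to be interested in music, you will certainly find something in it that appeals to you, whether you want to compose, learn theory, or find libraries to use. Towards the end you will also find other music-related lists, such as audio visualisation, which may meet your needs as well.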

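Today's recommended English article: "A.I. Demilitarisation Won't Happen" by Josef Bajada

Original link: https://medium.com/@josef.bajada/a-i-demilitarisation-wont-happen-92bcf6d4bc7d

Why we recommend it: You have probably seen A.I. weapons in science fiction films or anime, and something like that may well happen in the future.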

A.I. Demilitarisation Won't Happen

Artificial Intelligence is already being integrated into next-generation defence systems, and its demilitarisation is highly unlikely. Restricting it from military use is probably not the smartest strategy to pursue anyway.

Photo by Rostislav Kralik on Public Domain Pictures

This year's World Economic Forum annual meeting is about to start. While browsing through this year's agenda, I couldn't help but recall last year's interview with Google's CEO, Sundar Pichai, who was quite forthcoming and optimistic about how he expects A.I. to change the fundamental ways in which humanity operates.

Indeed, the automation of thinking processes, combined with high speed communication networks, instant availability of data, and powerful computation resources, has the potential to affect all mechanisms of society. A.I. will play a key role in the decision making, day-to-day running and automation of health, education, energy provision, law enforcement, economics, and governance, to name just a few.

Like most other innovations, each technological advancement can be misused for evil purposes, and A.I. is no different. What scares most people is its elusive nature: it is a very broad field, and it plays a central role in many science fiction plots that don't end well.

In his interview, Sundar tried to quell such concerns by proposing that countries should demilitarise A.I. Of course, one should take such comments in context. But expecting such a thing from all countries is like expecting mankind to stop waging war altogether.

The reason I emphasise "all" is that it takes just one country to create an imbalance. What if North Korea decides to integrate A.I. into its ICBMs for high-precision autonomous guidance? Is it realistic to expect the U.S. to refrain from doing the same to counteract such threats? It is Mutual Assured Destruction all over again.

In such a situation you can only achieve a Nash equilibrium if both parties have more to lose when they adopt a more aggressive dominant strategy and the opponent retaliates. One would expect the CEO of one of the world's largest A.I.-focused companies to be at least aware of such a fundamental concept of Game Theory.
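As a rough illustration of the equilibrium argument above, the following Python sketch enumerates the pure-strategy Nash equilibria of a two-country game. The payoff numbers, the strategy labels and the function name `pure_nash_equilibria` are all hypothetical, chosen only to show the mechanics; they are not taken from the interview or from any real-world estimate.

```python
from itertools import product

# Hypothetical payoffs as (country A, country B); higher is better.
# Strategy 0 = refrain from military A.I., strategy 1 = integrate it.
ARMS_RACE = {
    (0, 0): (3, 3),  # both refrain: good for both
    (0, 1): (0, 4),  # A refrains, B integrates: B gains an edge
    (1, 0): (4, 0),  # A integrates, B refrains: A gains an edge
    (1, 1): (1, 1),  # both integrate: costly balance
}


def pure_nash_equilibria(payoffs, n_strategies=2):
    """Return strategy pairs where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product(range(n_strategies), repeat=2):
        payoff_a, payoff_b = payoffs[(a, b)]
        # Player A cannot do better by switching while B stays put.
        a_stable = all(payoffs[(alt, b)][0] <= payoff_a
                       for alt in range(n_strategies))
        # Player B cannot do better by switching while A stays put.
        b_stable = all(payoffs[(a, alt)][1] <= payoff_b
                       for alt in range(n_strategies))
        if a_stable and b_stable:
            equilibria.append((a, b))
    return equilibria


print(pure_nash_equilibria(ARMS_RACE))  # [(1, 1)]
```

Under these assumed payoffs the only stable outcome is `(1, 1)`, where both countries integrate A.I. Mutual restraint `(0, 0)` pays more for both, but it is not an equilibrium because either side gains by defecting alone.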

Photo Credits Wikimedia Commons

But of course Sundar is fully aware of this. His utopian vision of harmonious, peaceful collaboration between countries on the application of A.I. is just playing to the tune of his employer's agenda. Ironically, this took place at the start of the same year in which its "don't be evil" motto was removed, its own employees walked out in protest over its participation in military projects, and people started to get seriously concerned about the availability and misuse of their private information, such as emails and mobile phone data.

Maintaining a balance of power on opposing sides is already a good enough reason not to impose restraints on one side, without any concrete guarantees that the other side is actually doing the same. But here are some more reasons why A.I. demilitarisation will not happen, and why we probably do not really want it to happen in the first place.

Funding

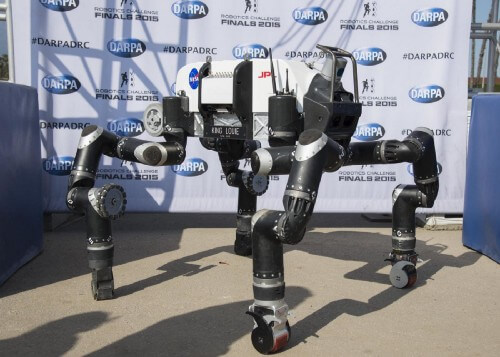

Defence budgets drive a lot of research and innovation in western countries where having a strong military capability is considered critical. They don't just fund the construction of missiles and stealth fighters. They support the development of ideas that often do not yet have a commercial business model, but are still worth having before competing nations do. Of course it is more likely that ideas with clear military application get funded from defence budgets. But technologies such as the Internet (originally ARPANET) and the Global Positioning System started as defence projects and were eventually released to the public.

Autonomous vehicles may have become more popular due to Google's self-driving car project (now Waymo), but in actual fact this was the result of a grand challenge by the US Defense Advanced Research Projects Agency (DARPA). Google then recruited people from the team that won the 2005 challenge. DARPA also held a robotics challenge, with the objective of developing humanoid robots that are capable of performing dangerous tasks in environments that are too harmful for humans. One only needs to recall the Chernobyl and Fukushima disasters, and the consequences of people working in radioactive environments to deal with their aftermath, to realise how useful such a technology could be.

JPL's RoboSimian at the DARPA Robotics Challenge

DARPA takes A.I. very seriously, as do other defence organisations such as BAE Systems and Thales. Expecting the military to fold its hand and distance itself from A.I. is plainly unrealistic.

A.I. Can Reduce Casualties (on both sides)

One of the main concerns about the use of A.I. in the military is the development of killer robots. This is a legitimate concern that needs to be addressed through the right policies and treaties, although I doubt that, if the technology components were available, any country would publicly admit to having autonomous weapons (until it is forced to bring them out and use them). Furthermore, if it becomes possible for one nation to obtain such a technology, it is probably a very bad idea to leave the playing field unbalanced.

Given that Artificial General Intelligence is still very far off, and a "Terminator-style" killer robot is unlikely, maybe we should focus more on the immediate benefits of A.I., such as Battlefield Intelligence. What if a soldier's visor was equipped with augmented reality that uses A.I. to distinguish friend from foe, or civilian from threat, more accurately than an adrenaline-pumped human fearing for his life? Autonomous armoured vehicles can be used for high-risk missions, like delivering supplies through a hostile route, or extracting casualties from an active combat zone. Robots could accompany soldiers and assist them in investigating threats or carrying heavy equipment.

Photo by Sgt. Eric Keenan

What if missile guidance systems were equipped with on-board algorithms that verified their targets before detonation? "Surgical strikes" would just hit their intended targets, instead of causing collateral damage and innocent civilian deaths. What about smarter air defence systems that make use of A.I. to track and intercept incoming airborne threats, or even detect them before they are actually launched?

A.I. is not Well Defined

Most A.I. techniques involve crunching data streams, applying mathematical techniques such as calculus, probability and statistics, and putting them together using a handful of algorithms to arrive at a solution. There is nothing really cognitive, sentient or self-aware going on.

Unlike nuclear weapons, where you can clearly determine whether a country has stockpiles or not (assuming you can find them), there is no clear way to classify the software technology that is integrated into the various defence systems. Furthermore, one can't really expect the military to refrain from using the data and computing resources it has available to obtain faster, smarter, and machine-verified decision support, while the general public has it at their fingertips. A.I. demilitarisation is just not happening.

Download the Open Source Daily app: https://openingsource.org/2579/

Join us: https://openingsource.org/about/join/

Follow us: https://openingsource.org/about/love/