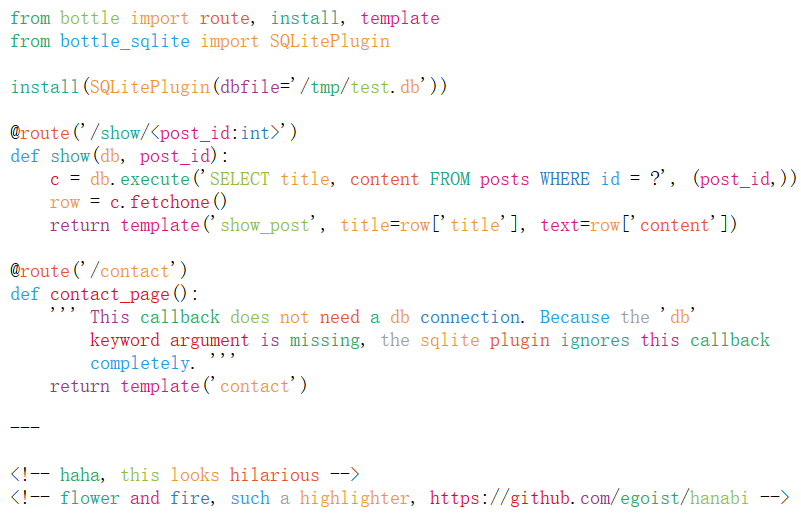

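Today's recommended open-source project: "Hanabi" (Setting Off Fireworks)

Today's recommended English article: "Is Code Coverage a Useless Metric?"

Today's recommended open-source project: "Hanabi" (Setting Off Fireworks). Link: GitHub

Why we recommend it: As everyone knows, fireworks are colorful. As everyone also knows, hardly anyone sets them off these days. Take that enthusiasm somewhere else, though, and you get this project. It highlights your code in an extremely colorful way, so that it looks like fireworks bursting at random (not something you'd want to stare at all day in your work code). As for practicality, well, hmm... but the entertainment value is off the charts.

Today's recommended English article: "Is Code Coverage a Useless Metric?" by Jamie Morris

Original article: https://medium.com/better-programming/is-code-coverage-a-useless-metric-bc76e0fde9e

Why we recommend it: a test only earns the name if it makes sure there are no problems, or finds the ones that exist.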

Is Code Coverage a Useless Metric?

Should you really be going for 100% test coverage?

Many are the smug developers who laud their high coverage over their peers. And many are the cynics who sneer at the mention of any such metric.

I’d like to think that most of us sit somewhere between those two positions, recognizing the value of metrics without becoming a slave to them.

I recently mentioned “100% test coverage” in a sentence. I forget the exact context, but I believe I was pointing out that if you follow TDD to the letter, it should be impossible not to get 100%.

A colleague stood up to peer at me over his monitor, something primal in his brain angered by the mention of “100% test coverage”. We had a friendly debate on the subject, and I resolved to write down what we discussed.

Metrics

Test coverage is a by-product of good practice, and as such, your development team probably shouldn’t focus on it.

As a developer, you probably know already if you are testing enough, so having a metric that confirms or refutes that belief is unnecessary. If the metric says that you aren’t testing enough, but you believe you are, should you write more tests? Probably not.

Your boss, on the other hand, or their boss, shouldn’t have to get into the weeds to understand if your code is robust.

Like it or not, your boss wants to know that you are doing a good job. This probably includes testing your code, which I say on the assumption that your boss values automated testing.

Let’s not dwell on the possibility that your boss does not value tests, since coverage isn’t going to help in that case.

If anyone (your boss included) wants to know how well you test your code, they have three options:

- Talk to you about it.

- Check your code for themselves.

- Look at some kind of high-level metric that gives them a rough idea.

I challenge any developer to convince me that they don’t need tests for new functionality.

I’ve also worked with people who were so desperate to not deliver bad news that they outright lied about the quality of their tests. It turns out there were no tests.

Option two is unrealistic for many people. Stakeholders are allowed to care about testing, since it represents a huge safety net if done properly. But most stakeholders probably lack the technical expertise, time, and experience to read through a suite of tests and assess their quality.

This is where metrics come into play. Test coverage does not assure high-quality code any more than the quality of a movie is assured by how much money it makes. In both cases, the given metrics are indicators only, and used by the powers that be to make decisions.

The true value of code coverage is as an indicator. Just like high profits indicate a good movie, high code coverage indicates a well-tested codebase.

There are exceptions, of course.

Transformers and its many (many) sequels are huge box office successes, yet many films in the series are heavily panned by viewers. At the same time, there are countless movies for which financial success was elusive, yet which have received universal critical acclaim. Fight Club, for example, is regarded as a classic, yet had an underwhelming run at the box office.

But we’re talking about code quality, not movies. When I see code coverage of 90%, I might reasonably assume that the project is well-tested. When I see coverage of 10% I might reasonably assume it is not.

If I am a senior leader, low coverage might tell me that my teams don’t feel they have the time to write tests. It might indicate that teams are chasing deadlines or that they don’t know how to test their code.

This might allow me to make adjustments for the good of the team and the project — the metric becomes a starting point for a conversation.

The harder problem to spot is when high code coverage masks poor quality. I don’t often see this, but it does happen. Usually it happens when inexperienced developers work alone without peer review.

The best cure for this kind of behavior is pair programming, though most teams settle for code reviews.

What Does High Coverage, Low Quality Look Like?

Poor quality tests might take many forms, but most commonly, they have very few assertions. Such tests still have some value as smoke tests, but can often mask problems that are harder to spot.

On one occasion, I was reviewing a pull request in which the tests were all passing and the coverage was above the arbitrary threshold set in the build pipeline.

One test had a mock created during test setup, and the only assertion was against the output of the mock. That highlighted to me that the developer didn’t understand the purpose of mocks, and when I talked to him, he was fairly critical of his own code, but even more critical of the process.

To him, the crappy tests were pointless because they only asserted on the outcome of the mock, but were necessary to pass the coverage tollgate.

Given how many pull requests don’t get properly reviewed (especially the tests), I suspect there are more examples I could find, and wonder how much of the cynicism around testing rests on poor assumptions.

In the example above, I worked with the developer in question to rewrite his tests and to help him understand the purpose of unit tests. I’m sure this is old news to many, but it doesn’t hurt to remind ourselves once in a while.

- A unit test should test a single class/function (the unit).

- The unit should be small enough that it is easy to test — if you find yourself testing dozens of different permutations of the same unit, this might indicate that your unit is doing too much and should be broken into smaller units (the single responsibility principle).

- You should mock dependencies outside of the unit, such as API calls or other units that interact with this one (for instance, when writing a component that contains several sub-components, you probably ought to mock the sub-components and test them elsewhere).

- You should not mock anything inside the unit — this is a smell that usually means you should refactor something out of the class.

- Your assertions should not care about private properties or methods — only test the outputs of your unit, not the internals.

```javascript
// Jest-style tests. CUSTOMER_FACTORY and CustomerListComponent belong to the
// project under test and are not shown here.
it('should get the customers from the service', () => {
  const testData = CUSTOMER_FACTORY.getArray(10);
  const mockService = {
    getCustomers: () => testData
  };
  const unit = new CustomerListComponent(mockService);

  // Asserts on a property of the unit.
  expect(unit.customers).toBe(testData);
});

it('should display the customers from the service', () => {
  const testData = CUSTOMER_FACTORY.getArray(10);
  const mockService = {
    getCustomers: () => testData
  };
  const unit = new CustomerListComponent(mockService);

  // Asserts on the rendered output instead: one list item per customer.
  const html = unit.render();
  const listItems = html.querySelectorAll('li.customer');
  expect(listItems.length).toBe(testData.length);
  testData.forEach((customer, i) => {
    expect(listItems[i].textContent).toContain(customer.name);
  });
});
```

The exception is when you are writing an API for others to consume. At that point, your user is another developer and you might care about properties being set, since the user/developer will need those properties.

But again, you ought to only test the public properties, since they form your contract with the user. Don’t go testing the internals of your unit.

There are, of course, many ways to write “bad” tests, but most of the time even a “bad” test is better than none at all.

There are exceptions, I’m sure, but for the most part, the fact that you are writing tests at all is a huge win for your customer and future-you.

Metrics = Suspicion

I see many developers who become suspicious when anyone outside the team asks about code coverage. “Why do you need to know?” is often the knee-jerk response.

In some respects, this is justified, but it very much depends on the motives of the external party. Developers don’t want to feel their metrics will be used as a cudgel to punish them, nor do they want to feel that they have to “play the game” to appear competent.

This speaks of a distrust between teams and the rest of the world. “They” want to rank every team and punish those at the bottom. “Other teams” will game the system by writing poor quality tests to increase coverage and appear competent.

“We” will appear less competent than we actually are in comparison because “we” have too much integrity to chase a metric.

I haven’t had the displeasure of working under anyone who actually viewed coverage as a ranking system or an absolute measure of quality.

I’ve no doubt that such people exist, but I’m sure that many of those could be talked around. If you know anyone in that category, point them at this article, for starters.

The Best Kind of Test Is a Failing Test

Remember that the purpose of your test is to tell you when stuff is going wrong. In that respect, a test that always passes is nice to have, but ultimately useless.

That same test becomes useful as soon as it fails. Assuming that it didn’t fail because you changed the test itself, the test failure indicates a problem with your implementation.

It’s much better to have your test alert you to the problem than for a customer to discover it (or worse, for the problem to go unnoticed, subtly undermining your application in small ways).

If my test were a person, I would take it out for a celebratory drink once the problem it flagged was fixed.

Get to the Point!

Oh yeah, code coverage. Well, I look at it like this: I can pretty easily hit 100% coverage and still not have enough tests. All 100% means is that every line of my code was run at least once. It could still be gibberish.

A more pessimistic developer might use this as an argument for why 100% is a stupid target. I guess that’s right in a way, but what makes 80% any better? What is in the extra 20% that you aren’t testing?

I prefer to exclude code that I don’t want to test, and then aim for 100% on everything that’s left. That way, I’m being explicit about the bits I do and don’t want tested.
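One way to express "exclude explicitly, then demand 100%" is a Jest configuration like the sketch below. It assumes the project uses Jest; `coveragePathIgnorePatterns` and `coverageThreshold` are real Jest options, but the ignored directories are hypothetical placeholders. To exclude a single function rather than a whole path, Jest's default Babel-based coverage provider instruments code with Istanbul, which honours `/* istanbul ignore next */` comments.

```javascript
// jest.config.js: a minimal sketch. The ignored paths are hypothetical
// examples of code a team has explicitly decided not to test.
const config = {
  collectCoverage: true,
  // Regex patterns matched against file paths; matching files are left out
  // of coverage entirely. Setting this replaces Jest's default
  // ['/node_modules/'], so that entry is repeated here.
  coveragePathIgnorePatterns: [
    '/node_modules/',
    '/src/generated/', // hypothetical: auto-generated code
    '/src/dev-tools/', // hypothetical: local-only tooling
  ],
  // Fail the run if anything still being measured drops below 100%.
  coverageThreshold: {
    global: {
      branches: 100,
      functions: 100,
      lines: 100,
      statements: 100,
    },
  },
};

module.exports = config;
```

Keeping the exclusions in one reviewed file makes the "bits I don't want tested" visible to the whole team rather than hiding them in a lower percentage.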

My Java developer friends, of course, point to getters and setters as examples of code that doesn’t need to be tested, because they are auto-generated, and testing them just to hit a metric is a waste of time.

To that, I say: “Come and write JavaScript instead — it’s what all the cool kids are doing.”

Download the Open Source Daily app: https://openingsource.org/2579/

Join us: https://openingsource.org/about/join/

Follow us: https://openingsource.org/about/love/