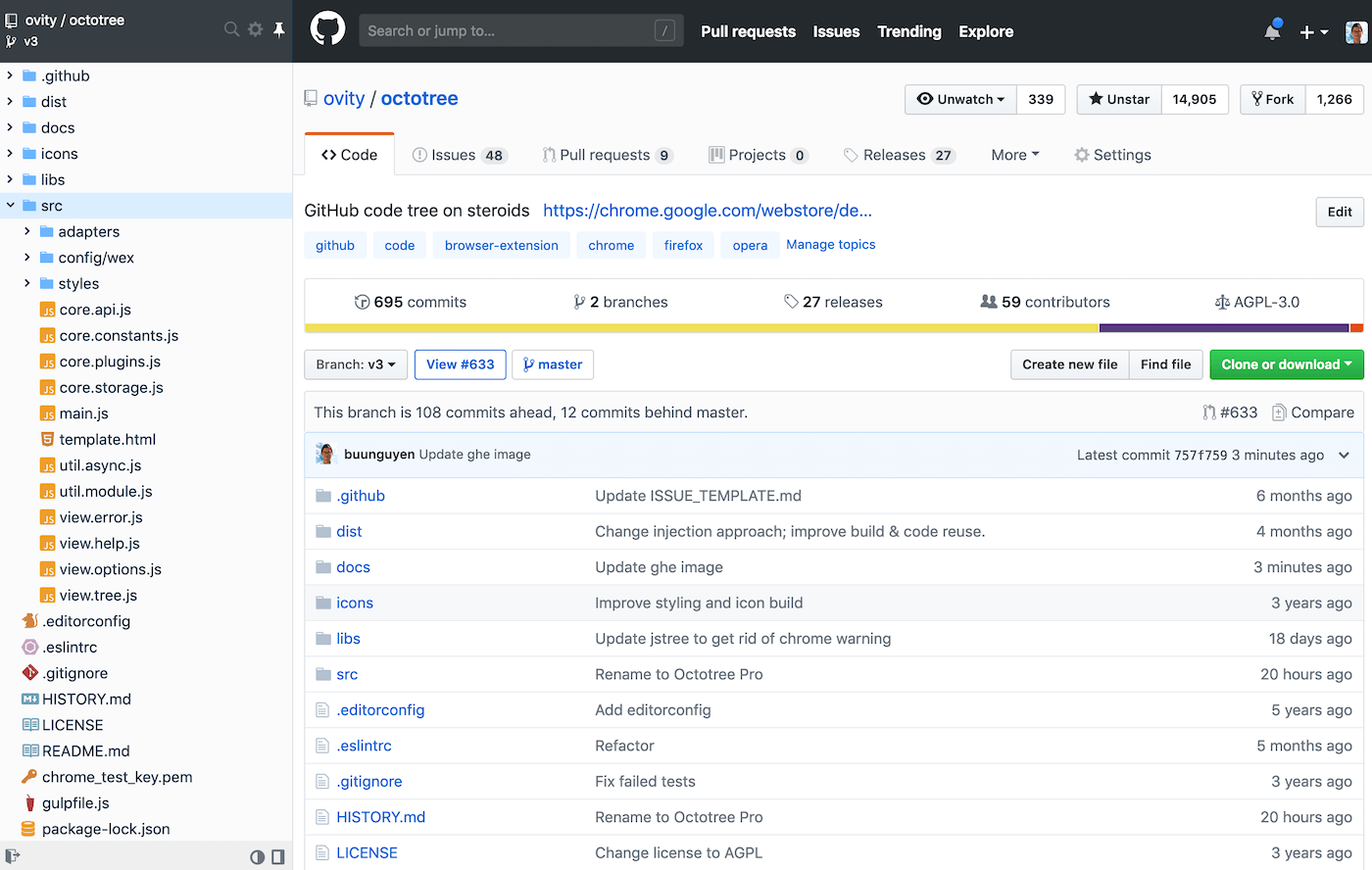

Today's recommended open-source project: "Code Tree (octotree)"

Today's recommended English article: "A Guide to Building Your Own Technical Interview"

Today's recommended open-source project: "Code Tree (octotree)" Link: GitHub

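Why we recommend it: When you want to understand a repository's structure on GitHub, the best approach has usually been to clone it as local files, because clicking through folders one at a time in the browser is tedious. This project solves that problem: it expands the repository's code tree in the browser, just like the file tree you see when writing code, which saves a lot of effort when browsing repositories.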

Today's recommended English article: "A Guide to Building Your Own Technical Interview" Author: CSPA

Original article: https://medium.com/@cspa_exam/a-guide-to-building-your-own-technical-interview-95c32f1d43d2

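Why we recommend it: Eventually you will be capable enough that it becomes your turn to interview newcomers. This article explains how to go about interviewing others.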

A Guide to Building Your Own Technical Interview

What’s the difference between Fizz Buzz and a Traveling Salesman variant? What about whiteboarding, live coding, and take-homes? Is it merely “difficulty” and format? Or is it something more that most interviewers don’t think about?

Unknown to some, having technical skills is quite different from assessing technical skills. In other words, being a great engineer does not automatically make you competent at interviewing great engineers. Realizing this is the first step to designing good technical assessments.

In this post we will cover the many different ways to assess technical skills. Whether you’re a company looking to hire new talent, a recruiter looking to screen potential clients, or even a coding school looking to write an entrance exam, this post will help you better achieve your goals.

Motivation

It’s not uncommon to hear programmers’ frustrations with the current state of the interview process [1] [2] [3]. If you want to hire top talent, you have to understand the pros and cons of how you’re interviewing your candidates. No technical assessment is perfect, and you need to account for the imperfections in your own. Another motivation is maintaining a professional persona. Poor interviews can lower your candidates’ opinion of your company. Instead, you should aim to give an excellent interview, excellent enough that your candidates recommend your company to their colleagues even if they don’t fit the role. Lastly, you don’t want to design a technical assessment without any kind of study. If you do, you’ll almost certainly design an assessment with problems: uninformative results, false negatives, and even unintentional bias.

Overview

After going through this post, you should be more proficient in:

- Articulating the purpose behind each technical question you ask

- Specifying what it means to answer a question correctly or incorrectly

- Minimizing bias as you design your problems and questions

The post covers the following topics:

- Two Conflicting Approaches to Designing Assessments

- Common Types of Technical Interviews

- Assessment Design Guidelines

- Putting Your Technical Assessment into Practice

Two Conflicting Approaches to Designing Assessments

Not all assessments have the same goals. Consider a college math professor grading final exams. The professor has two main approaches to choose from: a normative assessment and a qualification assessment.

Normative Assessments

The method of a normative assessment is to pit students against each other, usually by grading on a curve. This type of assessment aims to be difficult enough to produce a normal distribution of scores, thereby limiting the number of students who get top marks. In the software industry, everyone wants to hire “the best”. But issues arise when attempting to put this into practice:

- What is “the best” in software engineering, and how do you quantify it?

- Even once you decide how to quantify it, a normative technical assessment is probably impractical unless you have a standardized platform like CSPA: you need a large number of test-takers before your data becomes informative.

- Even if you do use a standardized assessment platform, unless you are a large corporation, you may not be able to afford the top X% in the first place. A qualification assessment (explained in the next section) might better suit your needs.

Qualification Assessments

Instead of comparing candidates against their peers, a qualification assessment tests whether a candidate meets the bar for a specific skill or role. (Aside: qualification assessments correspond to criterion-referenced tests in academia.) Qualification assessments have several benefits:

- They can be directly related to the role you’re hiring for.

- Their focused scope makes them easier to write and maintain.

- They are less likely than normative assessments to push away good candidates.
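To make the contrast concrete, here is a minimal Python sketch, not taken from the original article, of how the two approaches turn the same scores into pass/fail decisions. The function names, the `top_fraction` cut-off, the `threshold` value, and the candidate scores are all hypothetical. A normative cut passes only the top slice of the pool, while a qualification cut passes everyone who clears a fixed bar.

```python
from __future__ import annotations

# Hypothetical sketch: normative (norm-referenced) vs. qualification
# (criterion-referenced) pass decisions over the same candidate scores.


def normative_pass(scores: dict[str, float], top_fraction: float) -> set[str]:
    """Pass only the top `top_fraction` of candidates, ranked against each other."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Always pass at least one candidate so a small pool is not emptied.
    cutoff = max(1, round(len(ranked) * top_fraction))
    return set(ranked[:cutoff])


def qualification_pass(scores: dict[str, float], threshold: float) -> set[str]:
    """Pass every candidate whose score meets a fixed bar, regardless of peers."""
    return {name for name, score in scores.items() if score >= threshold}


if __name__ == "__main__":
    scores = {"ana": 92, "ben": 85, "cai": 81, "dev": 60}
    print(sorted(normative_pass(scores, 0.25)))    # ['ana']
    print(sorted(qualification_pass(scores, 80)))  # ['ana', 'ben', 'cai']

    # A stronger applicant joins the pool: the normative cut changes for
    # everyone else, while the qualification cut does not.
    scores["eve"] = 95
    print(sorted(normative_pass(scores, 0.25)))    # ['eve']
    print(sorted(qualification_pass(scores, 80)))  # ['ana', 'ben', 'cai', 'eve']
```

The point worth noticing is that `normative_pass` depends on who else applied, which is why a normative assessment needs a large, standardized pool before its results mean much.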

Common Types of Technical Interviews

Although there is currently no standard for technical interviews, you’ll find many patterns if you study industry trends. Here’s a list of some of the most common formats, ordered from least to most useful (in our opinion):

- Whiteboard Problems

- Technical Details

- Live Coding

- On-site pair programming

- Take-home Projects

Whiteboard Problems

Whiteboard problems, also known as whiteboarding, are one of the lowest candidate-rated interview formats, yet among the most frequently used. Whiteboard problems assess a candidate’s ability to solve a problem “on the spot”, using only a whiteboard and a dry-erase marker, all within a limited amount of time. Candidates are typically not allowed to use research tools, although sometimes they can ask the interviewer questions. Whiteboarding attempts to assess a candidate’s problem-solving skills. However, due to its constraints, this goal is hard to meet:

- Limited time. Whiteboard sessions are usually 30–60 minutes. If a candidate does well, it could be due to their problem-solving skills, but it could also be because they’ve seen a similar problem before. If a candidate does poorly, that doesn’t imply they have poor problem-solving skills; they might have done well under more realistic conditions that allow more time, uninterrupted pondering, use of Google, etc.

- Subjective impression. Defining a useful, objective metric for a whiteboard problem is difficult. If the metric is “they solved the problem”, then you are filtering for candidates who have seen your type of problem before. If the metric is to “see how they think”, then you are filtering for candidates who appeal to the subjective impression of the interviewer.

- Low relevance. Unless the role involves a high amount of algorithmic problem solving, whiteboarding may be filtering for irrelevant skills. This is often demonstrated by how much an interviewer has to study before conducting a whiteboard session.

Technical Details

The technical details format aims to gauge how well a candidate knows a technology they will likely be working with. The idea is that if a candidate can answer questions sufficiently, they are competent in that subject. This format has its benefits:

- Low commitment. This format is quick and simple to execute.

- Least resistance. No candidate will think this format is unreasonable.

- Effective screening. However, this depends heavily on how skilled the interviewer is at asking questions.

However, it also has drawbacks:

- Low information. Talking about coding is different from actually coding. Someone may talk well but not program well.

- Subjective impression. The assessment boils down to “do they sound like they know what they’re talking about?”

- Reliance on familiarity. Because the tech world is so vast, no one can know everything about any one area, and two experts in the same domain may know very different things. Thus, a candidate not knowing the answer to a question does not necessarily imply a lack of expertise.

- Vulnerable to inconsistency. Is the interviewer asking quality questions or technical trivia? Is the interviewer following a standard script to maintain consistent assessment across candidates?

Live Coding

Live coding is an interview format where candidates are presented with a programming problem and then code the solution in real time. This is most often done remotely. This format offers several benefits:

- Real coding. Unlike whiteboarding or abstract discussions, you get to see the candidate write real code.

- Available tools. A candidate can have access to tools like Google, making the coding session more realistic.

- Live debugging. You can see how a candidate responds to bugs and how they go about fixing them.

- Familiar Environment. Depending on how you conduct the session, the candidate may have access to their own text editor, and not be hindered by a foreign machine or an in-browser setup.

However, it also has drawbacks:

- High pressure. Despite having relevant experience and skills, some engineers simply cannot perform under the particular kind of stress that a live coding session creates.

- Unfamiliar environment. Some online live coding tools don’t let candidates use their own editor setup, which will make your candidate seem slower than they really are.

- Low relevance. Companies often ask Project Euler-type puzzles, causing the same issues as the other formats.

- Subjective impression. Similar to whiteboarding, if the metric is “they solved the problem”, then you are filtering for candidates who have seen your type of problem before (which is only bad if the problem is of low relevance). If the metric is to “see how they think”, then you are filtering for candidates who appeal to the subjective impression of the interviewer.

On-Site Pair Programming

On-site pair programming is like an improved version of live coding. As part of the interview process, candidates travel to the company for an on-site day of programming with current employees on production code. This format offers some good benefits:

- Real coding. Instead of showing how a candidate codes in a vacuum, this format shows how they work with actual code that other people wrote.

- Face-to-face interaction. The candidate works directly with the team while programming, allowing for higher quality feedback and assessment.

- High relevance. The candidate works with real production code, so there is little chance the interview will be irrelevant.

However, it also has drawbacks:

- Lack of domain knowledge. Your candidate will not be familiar with your exact production setup. Depending on your business, candidates may only be able to work on trivial parts of the app. You also might not see effective debugging (unless they’ve worked with your exact stack before).

- Unfamiliar environment. Candidates will be hindered as they won’t be able to use their own machine / editor, unless you grant them access to your codebase.

- Higher commitment. Companies often want candidates to spend half or a full day with the team. This is a higher commitment for the team as well as the candidate.

Take-Home Projects

A take-home project is a format where a candidate receives a small project specification, perhaps with some starter code, and implements the spec remotely on their own machine and environment. Upon completion, they submit their work to the company, either by email or through source control. Take-home projects can be the most efficient way to evaluate a candidate’s technical ability, but with a catch. First, the benefits:

- Real coding. Like the previous two formats, take-home projects produce real code you can evaluate and discuss.

- Less stressful (potentially). Many top candidates do poorly when live coding under constant watch. Take-home projects are a way for them to better demonstrate their skills.

- Higher relevance. This format gives opportunity to assign a project that’s more relevant to the job than Euler-style programming puzzles.

- Familiar environment. Because this format is done at home, candidates are not hindered by unfamiliar setups or environments.

Now the drawbacks:

- Higher commitment. This is the catch mentioned earlier. Remember that you’re competing against other companies. Take-homes take hours to complete, and not all engineers (senior engineers especially) have the time to complete more than one.

- Higher cost. Take-homes are full projects; they require more time from your engineers to evaluate, as they should look for qualities that aren’t covered by automated tests.

- Difficult to scope. It’s all too easy to add requirements that push the required time too high.

Assessment Design Guidelines

Now that we’ve covered some common technical interview formats, let’s move on to designing your own technical assessment. The primary goal of a technical assessment is to verify technical competency. To do this, you need to consider:

- Choosing formats

- Defining your criteria

- Reducing potential bias

- Testing

1. Choosing Formats

As explained earlier in the post, your assessment should be a qualification assessment, not a normative one. You should also strive to choose formats that will yield the largest pool of candidates. To do this, you must take into consideration:

- Relevance. Be sure to use techniques and ask questions relevant to the role, or candidates will be unhappy that you’re wasting their time.

- Time commitment. Be conscious of how much time you’re asking of your candidates. Requiring too much time will filter out good ones. For example, senior engineers are often older and have less free time due to more life responsibilities (family, kids, etc.).

- Stress. People respond to stress differently in different situations. A good candidate may do poorly in one interview format, but could excel in another.

2. Defining Your Criteria

Next, you need to specify what a good candidate response looks like. This is a predefined list of relevant criteria that you use to judge each candidate’s technical responses. Similar to user stories, you need to define the purpose of each criterion you list. For example:

Our designer role needs to know responsive CSS so that they can implement the designs they create.

Writing each purpose like this will help you identify and remove criteria that may not be essential to the role you’re hiring for. This will save you time and money. For example, let’s say you are considering adding a criterion for gulp.js. You begin to write: “Our node.js engineer role needs to know gulp.js so that they can…”. Write gulp tasks? Use it on the command line? Perhaps this criterion is not as important as you first thought. After going through this process, you should have a list of criteria where each item is a specific point to test for in your assessment.

Point System

To reduce subjective bias, you should assign point values to each of your criteria. The following is a suggested model. Each criterion should have three defined outcomes:

- Full credit, where a candidate is awarded the full points for the criterion,

- Partial credit, where a candidate is awarded a fraction of the full points (for example, half),

- No credit, where the candidate is awarded zero points because the criterion is barely met or not met at all.

For example, a criteria list might look like this:

- [30 points] Responsiveness
  - Full: Site is fully responsive from at least 320px in width
  - Partial: Site does not gracefully handle some widths

- [30 points] Use a CSS methodology
  - Full: All CSS uses BEM, SMACSS, or OOCSS
  - Partial: Only some CSS uses the above

- [20 points] Flexbox
  - Full: An example of flexbox is used and works correctly
  - Partial: An example of flexbox is used but has issues

- [10 points] CSS Tables (optional)
  - Full: An example of CSS Tables is used and works correctly
  - Partial: An example of CSS Tables is used but has issues

- etc.
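The point system above can be sketched in a few lines of Python. This is a hypothetical illustration, not the article's own tooling: the `Criterion`, `Outcome`, and `score` names are invented here, and partial credit is fixed at half of a criterion's points, which is just one of the options the article mentions. A criterion with no recorded outcome is treated as no credit.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    FULL = "full"
    PARTIAL = "partial"
    NONE = "none"


@dataclass(frozen=True)
class Criterion:
    name: str
    points: int


# Assumption: partial credit awards half of a criterion's points.
PARTIAL_FRACTION = 0.5


def score(rubric: list[Criterion], outcomes: dict[str, Outcome]) -> float:
    """Sum the points earned across all criteria in the rubric."""
    total = 0.0
    for criterion in rubric:
        # Criteria the grader did not record default to no credit.
        outcome = outcomes.get(criterion.name, Outcome.NONE)
        if outcome is Outcome.FULL:
            total += criterion.points
        elif outcome is Outcome.PARTIAL:
            total += criterion.points * PARTIAL_FRACTION
    return total


# The example criteria list from above, with the same point values.
RUBRIC = [
    Criterion("Responsiveness", 30),
    Criterion("CSS methodology", 30),
    Criterion("Flexbox", 20),
    Criterion("CSS Tables", 10),
]

if __name__ == "__main__":
    graded = {
        "Responsiveness": Outcome.FULL,
        "CSS methodology": Outcome.PARTIAL,  # only some CSS follows BEM
        "Flexbox": Outcome.FULL,
    }
    earned = score(RUBRIC, graded)
    possible = sum(c.points for c in RUBRIC)
    print(f"{earned:g} / {possible}")  # 65 / 90
```

Restricting each criterion to three fixed outcomes is what keeps the total repeatable: two graders who agree on full, partial, or none for every criterion will arrive at the same score.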

3. Reducing Potential Bias

After you’ve defined your list of criteria and assigned points to it, you need to re-review its effectiveness. Ask yourself these questions:

- Assuming all non-technical requirements are met, if a candidate meets all my listed criteria, does that imply a job offer?

- Are there any criteria that may be unintentionally biased against certain groups, thus limiting your potential pool of candidates?

- Are there any criteria that may be biased toward your own technical familiarity, which might cause false negatives?

4. Testing

Before finalizing your technical assessment, you need to see how it fares against real engineers. Find a volunteer, perhaps a coworker or someone you know, and have them run through your technical assessment as if they were a candidate. They will inevitably run into problems, such as:

- Unclear directions

- Provided code not working as it should

- Broken links

- Incorrect automated tests

- Solutions you didn’t expect or want

- And so on.

Putting Your Technical Assessment into Practice

Designing a solid technical assessment is only the beginning. As you conduct it in the real world, be sure to keep the following things in mind:

- Be explicit about your expectations. If a point is important enough to reject a candidate over, you should tell the candidate about it as part of the project requirements.

- Assign engineers who are interested in interviewing and grading. Nothing is worse than an interviewer who doesn’t want to be there. Grading should be assigned to a single person for better consistency.

- Ensure a proper interviewer mindset. An interviewer should not try to make a candidate feel bad or inferior. Instead, they should strive to make candidates feel great about your company, even if the candidate does not get an offer.

- Think big picture. When evaluating candidate code, don’t pay attention to things such as conventions, spaces vs tabs, functional versus imperative, etc. The coding styles at your company can be taught to any competent programmer; a candidate doesn’t need to code that way naturally in the first place.

- Verify what you think are flaws. If a candidate does something differently than you would, don’t immediately conclude it’s inferior! Use it as an opportunity to ask and discuss their alternative approach.

Conclusion

Designing a technical assessment is difficult. Done properly, it will widen your pool of candidates, correctly identify the best ones for your company, and lead to great hires. Done improperly, it will cause frustration, make you miss out on top talent, and might even damage your company’s reputation. We hope this guide will be useful to you as you construct better assessments, and ultimately better experiences, for your candidates. Thanks for reading!

Download the Open Source Daily app: https://openingsource.org/2579/

Join us: https://openingsource.org/about/join/

Follow us: https://openingsource.org/about/love/
