Today's recommended open source project: "Design Master: Awesome-Design-Tools"

Today's recommended English article: "What Will a Robotic World Look Like?"

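Today's recommended open source project: "Design Master: Awesome-Design-Tools". Link: GitHub link

Why we recommend it: a collection of tools that are useful across many aspects of design. It covers tools used directly in design work, such as user flow diagramming, as well as tools for design materials such as icons, fonts, colors, and animation. Whether you are designing a personal website or developing an application, these tools should come in handy to some degree.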

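Today's recommended English article: "What Will a Robotic World Look Like?" Author: A. S. Deller

Original link: https://medium.com/predict/what-will-a-robotic-world-look-like-6fc838c54341

Why we recommend it: what would a world in which robots and humans coexist look like?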

What Will a Robotic World Look Like?

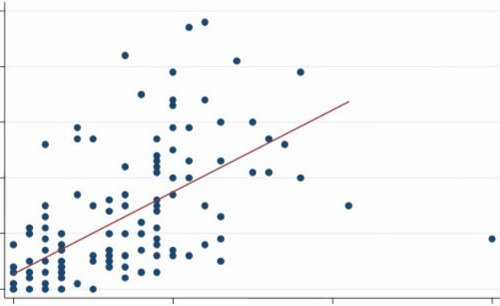

A fully-automated Earth in which robots work with humanity in every conceivable way has been imagined a million times over in science fiction books, film, games and television. According to our dreams, we might end up living in a world reminiscent of "WALL·E", in which machines assisted humanity in our environment-shattering quest for more-more-more that ruined the planet; a post-apocalyptic result of AI seeing Homo sapiens as a blight to be wiped out, as portrayed in "The Terminator"; or an Earth and Solar System where robokind and humans exist together in a strange dichotomy of harmony and distrust as envisioned by the works of Isaac Asimov.

If we were to place all of our imagined versions of a robo-enhanced future on a scatter plot, where the X axis is the timeline starting in the present (at 0) and going into the future (say, up to 1,000 years from now), and the Y axis represents the degree to which we get along with our robot companions as a percentage (with 0 being "Robopocalypse"-level mutually-assured destruction and 100 representing some kind of perfect utopia in which humankind and machines exist together in perfect harmony or have otherwise blended together into a new species), it might look a little like this:

The majority of what many people expect in the nearer future tends to the darker side of things. We compare robotics, and the research into artificial intelligence that usually accompanies it, with other similarly large advances in technology that often had some scary results. Indeed, work on atomic power began with bombs, led by military programs, and military programs are where much of the headway in robotics is being made today.

Everyone working in robotics and AI today generally agrees that our creations need to be designed from the ground up with distinct rules in place for how they will regard human life. A robot's AI must be able to unmistakably recognize humans and, at all costs, avoid harming them…

But then we are back to the militaries of the world being some of the primary organizations spearheading robotics work. As I write this, the United States, Russia, China and other countries are all actively running programs to create drones and AI-controlled battlefield robots expressly designed to kill people.

Robots similar to the one in the video below will, one day soon, be self-directed by onboard AI.

This contradiction is where the problem lies, because already, before we have really entered our upcoming transhuman, robotic era, we are seemingly ignoring the advice of two generations of scientists and futurists who have collectively thought about such problems to the tune of millions of hours. Geniuses, visionaries and tech pioneers like Kurzweil, Musk, Hawking, Page & Brin, Asimov, Vinge and many others have warned of the inherent dangers of AI and robotics for decades.

We, as in most of the world's citizens, do not want an Earth dominated by killer robots. This is the kind of world we want:

Credit: http://actuatorlaboratories.blogspot.com

We want a world where robots and AI work alongside us to make everything better. But if most of the earliest autonomous robots are built with military objectives in mind, we will be setting ourselves up for a dark future.

Isaac Asimov laid out his trademark Three Laws of Robotics in "Runaround", a 1942 short story. He later added a "zero" law that he realized needed to come before the others. They are:

Law 0: A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

First Law: A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Asimov would apply these laws to nearly all the robots he featured in his subsequent fiction, and most roboticists and computer scientists today still consider them extremely valid.

Ever since the idea of real robots existing alongside humans in society became a true possibility rather than just the product of science fiction, roboethics has become a true sub-field in technology, incorporating all of our knowledge of AI and machine learning with law, sociology and philosophy. As the technology and its possibilities have progressed, many people have added their ideas to the discourse.

As president of EPIC (the Electronic Privacy Information Center), Marc Rotenberg believes that two more laws should be included in Asimov's list:

Fourth Law: Robots must always reveal their identity and nature as a robot to humans when asked.

Fifth Law: Robots must always reveal their decision-making process to humans when asked.

Credit: Boston Dynamics

It's evident that the main thrust of most of these tenets is that we must do our level best to keep our robot creations from killing us. If we consider them to be the "children" of humanity, we certainly have our work cut out for us. As a whole, most parents do a good job of raising kids who end up respecting human life. But a not inconsiderable percentage of the population turns out to be rather bad eggs. Sometimes the cause is bad parents, sometimes it's bad genes, and other times there is no evident cause.

If we find ourselves living in the Singularity, and our children rapidly exceed any of our abilities to keep tabs on them, we may not be able to rely on any rules we set for them. Their evolution will be out of our control. We could quickly find ourselves in a position similar to where we have placed the lowland gorilla or giant panda.

Because of this, it may be best to instill in them the morals we hope we ourselves would better follow, and hope that our children can police themselves.

Thank you for reading and sharing.
