今日推薦開源項目:《CSS 的各種課題 iCSS》

今日推薦英文原文:《Intro to Deep Learning》

今日推薦開源項目:《CSS 的各種課題 iCSS》傳送門:GitHub鏈接

推薦理由:這是個使用 CSS 來完成各種課題,從而發現一些關於 CSS 的新細節的項目。其中有些相對有意思的課題,比如說用 CSS 實現斜線,實現導航欄的切換效果;當然了也有一些關於 CSS 屬性的正兒八百的問題。通過完成一些任務來學習 CSS 的細節是個不錯的選擇,如果有想要深入學習 CSS 的朋友可以考慮一試。

今日推薦英文原文:《Intro to Deep Learning》作者:Anne Bonner

原文鏈接:https://towardsdatascience.com/intro-to-deep-learning-c025efd92535

推薦理由:推薦給深度學習新手的神經網路介紹

Intro to Deep Learning

Photo by ibjennyjenny on Pixabay

We live in a world where, for better and for worse, we are constantly surrounded by deep learning algorithms. From social network filtering to driverless cars to movie recommendations, and from financial fraud detection to drug discovery to medical image processing (…is that bump cancer?), the field of deep learning influences our lives and our decisions every single day.

In fact, you』re probably reading this article right now because a deep learning algorithm thinks you should see it.

Photo by tookapic on Pixabay

If you』re looking for the basics of deep learning, artificial neural networks, convolutional neural networks, (neural networks in general…), backpropagation, gradient descent, and more, you』ve come to the right place. In this series of articles, I』m going to explain what these concepts are as simply and comprehensibly as I can.

If you get into this, there』s an incredible amount of really in-depth information out there! I』ll make sure to provide additional resources along the way for anyone who wants to swim a little deeper into these waters. (For example, you might want to check out Efficient BackProp by Yann LeCun, et al., which is written by one of the most important figures in deep learning. This paper looks specifically at backpropagation, but also discusses some of the most important topics in deep learning, like gradient descent, stochastic learning, batch learning, and so on. It』s all here if you want to take a look!)

For now, let』s jump right in!

Photo by Laurine Bailly on Unsplash

What is deep learning?

Really, it』s just learning from examples. That』s pretty much the deal.At a very basic level, deep learning is a machine learning technique that teaches a computer to filter inputs (observations in the form of images, text, or sound) through layers in order to learn how to predict and classify information.

Deep learning is inspired by the way that the human brain filters information!

Photo by Christopher Campbell on Unsplash

Essentially, deep learning is a part of the machine learning family that』s based on learning data representations (rather than task-specific algorithms). Deep learning is actually closely related to a class of theories about brain development proposed by cognitive neuroscientists in the early 』90s. Just like in the brain (or, more accurately, in the theories and model put together by researchers in the 90s regarding the development of the human neocortex), neural networks use a hierarchy of layered filters in which each layer learns from the previous layer and then passes its output to the next layer.

Deep learning attempts to mimic the activity in layers of neurons in the neocortex.

In the human brain, there are about 100 billion neurons and each neuron is connected to about 100,000 of its neighbors. Essentially, that is what we』re trying to create, but in a way and at a level that works for machines.

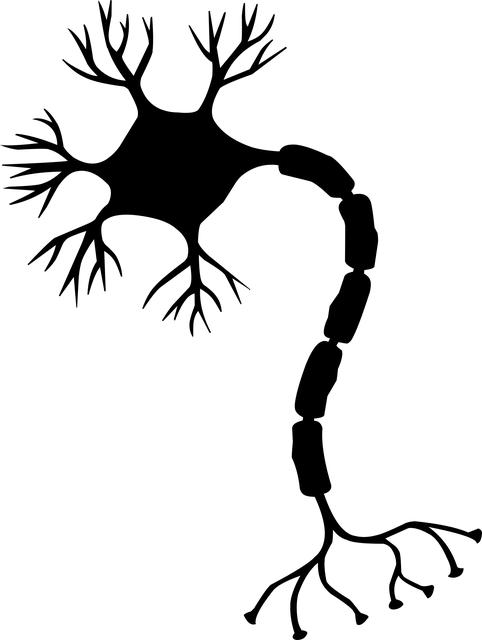

Photo by GDJ on Pixabay

The purpose of deep learning is to mimic how the human brain works in order to create some real magic.

What does this mean in terms of neurons, axons, dendrites, and so on? Well, the neuron has a body, dendrites, and an axon. The signal from one neuron travels down the axon and is transferred to the dendrites of the next neuron. That connection (not an actual physical connection, but a connection nonetheless) where the signal is passed is called a synapse.

Photo by mohamed_hassan on Pixabay

Neurons by themselves are kind of useless, but when you have lots of them, they work together to create some serious magic. That』s the idea behind a deep learning algorithm! You get input from observation, you put your input into one layer that creates an output which in turn becomes the input for the next layer, and so on. This happens over and over until your final output signal!

So the neuron (or node) gets a signal or signals (input values), which pass through the neuron, and that delivers the output signal. Think of the input layer as your senses: the things you see, smell, feel, etc. These are independent variables for one single observation. This information is broken down into numbers and the bits of binary data that a computer can use. (You will need to either standardize or normalize these variables so that they』re within the same range.)

What can our output value be? It can be continuous (for example, price), binary (yes or no), or categorical (cat, dog, moose, hedgehog, sloth, etc.). If it』s categorical you want to remember your output value won』t be just one variable, but several output variables.

Photo by Hanna Listek on Unsplash

Also, keep in mind that your output value will always be related to the same single observation from the input values. If your input values were, for example, an observation of the age, salary, and vehicle of one person, your output value would also relate to the same observation of the same person. This sounds pretty basic, but it』s important to keep in mind.

What about synapses? Each of the synapses gets assigned weights, which are crucial to Artificial Neural Networks (ANNs). Weights are how ANNs learn. By adjusting the weights, the ANN decides to what extent signals get passed along. When you』re training your network, you』re deciding how the weights are adjusted.

What happens inside the neuron? First, all of the values that it』s getting are added up (the weighted sum is calculated). Next, it applies an activation function, which is a function that』s applied to this particular neuron. From that, the neuron understands if it needs to pass along a signal or not.

This is repeated thousands or even hundreds of thousands of times!

Photo by Geralt on Pixabay

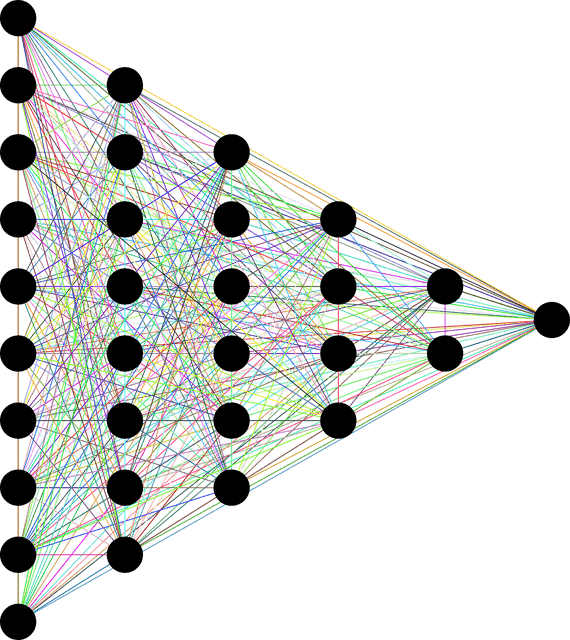

We create an artificial neural net where we have nodes for input values (what we already know/what we want to predict) and output values (our predictions) and in between those, we have a hidden layer (or layers) where the information travels before it hits the output. This is analogous to the way that the information you see through your eyes is filtered into your understanding, rather than being shot straight into your brain.

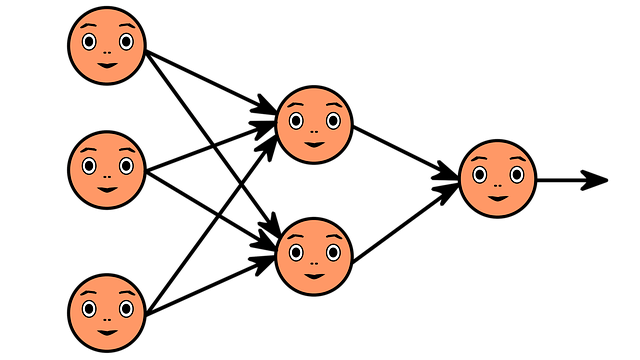

Image by Geralt on Pixabay

Deep learning models can be supervised, semi-supervised, and unsupervised.

Say what?

Supervised learningAre you into psychology? This is essentially the machine version of 「concept learning.」 You know what a concept is (for example an object, idea, event, etc.) based on the belief that each object/idea/event has common features.

The idea here is that you can be shown a set of example objects with their labels and learn to classify objects based on what you have already been shown. You simplify what you』ve learned from what you』ve been shown, condense it in the form of an example, and then you take that simplified version and apply it to future examples. We really just call this 「learning from examples.」

Photo by Gaelle Marcel on Unsplash

(Dress that baby up a little and it looks like this: concept learning refers to the process of inferring a Boolean-valued function from training examples of its input and output.)

In a nutshell, supervised machine learning is the task of learning a function that maps an input to an output based on example input-output pairs. It works with labeled training data made up of training examples. Each example is a pair that』s made up of an input object (usually a vector) and the output value that you want (also called the supervisory signal). Your algorithm supervises the training data and produces an inferred function which can be used to map new examples. Ideally, the algorithm will allow you to classify examples that it hasn』t seen before.

Basically, it looks at stuff with labels and uses what it learns from the labeled stuff to predict the labels of other stuff.

Classification tasks tend to depend on supervised learning. These tasks might include

- Detecting faces, identities, and facial expressions in images

- Identifying objects in images like stop signs, pedestrians, and lane markers

- Classifying text as spam

- Recognizing gestures in videos

- Detecting voices and identifying sentiment in audio recordings

- Identifying speakers

- Transcribing speech-to-text

This one is more like the way you learned from the combination of what your parents explicitly told you as a child (labeled information) combined with what you learned on your own that didn』t have labels, like the flowers and trees that you observed without naming or counting them.

Photo by Robert Collins on Unsplash

Semi-supervised learning does the same kind of thing as supervised learning, but it』s able to make use of both labeled and unlabeled data for training. In semi-supervised learning, you』re often looking at a lot of unlabeled data and a little bit of labeled data. There are a number of researchers out there who have found that this process can provide more accuracy than unsupervised learning but without the time and costs associated with labeled data. (Sometimes labeling data requires a skilled human being to do things like transcribe audio files or analyze 3D images in order to create labels, which can make creating a fully labeled data set pretty unfeasible, especially when you』re working with those massive data sets that deep learning tasks love.)

Semi-supervised learning can be referred to as transductive (inferring correct labels for the given data) or inductive (inferring the correct mapping from X to Y).

In order to do this, deep learning algorithms have to make at least one of the following assumptions:

- Points that are close to each other probably share a label (continuity assumption)

- The data like to form clusters and the points that are clustered together probably share a label (cluster assumption)

- The data lie on a manifold of lower dimension than the input space (manifold assumption). Okay, that』s complicated, but think of it as if you were trying to analyze someone talking — you』d probably want to look at her facial muscles moving her face and her vocal cords making sound and stick to that area, rather than looking in the space of all images and/or all acoustic waves.

Unsupervised learning involves learning the relationships between elements in a data set and classifying the data without the help of labels. There are a lot of algorithmic forms that this can take, but they all have the same goal of mimicking human logic by searching for hidden structures, features, and patterns in order to analyze new data. These algorithms can include clustering, anomaly detection, neural networks, and more.

Clustering is essentially the detection of similarities or anomalies within a data set and is a good example of an unsupervised learning task. Clustering can produce highly accurate search results by comparing documents, images, or sounds for similarities and anomalies. Being able to go through a huge amount of data to cluster 「ducks」 or the perhaps the sound of a voice has many, many potential applications. Being able to detect anomalies and unusual behavior accurately can be extremely beneficial for applications like security and fraud detection.

Photo by Andrew Wulf on Unsplash

Back to it!

Deep learning architectures have been applied to social network filtering, image recognition, financial fraud detection, speech recognition, computer vision, medical image processing, natural language processing, visual art processing, drug discovery and design, toxicology, bioinformatics, customer relationship management, audio recognition, and many, many other fields and concepts. Deep learning models are everywhere!There are, of course, a number of deep learning techniques that exist, like convolutional neural networks, recurrent neural networks, and so on. No one network is better than the others, but some are definitely better suited to specific tasks.

Deep Learning and Artificial Neural Networks

The majority of modern deep learning architectures are based on Artificial Neural Networks (ANNs) and use multiple layers of nonlinear processing units for feature extraction and transformation. Each successive layer uses the output of the previous layer for its input. What they learn forms a hierarchy of concepts where each level learns to transform its input data into a slightly more abstract and composite representation.

Image by ahmedgad on Pixabay

That means that for an image, for example, the input might be a matrix of pixels, then the first layer might encode the edges and compose the pixels, then the next layer might compose an arrangement of edges, then the next layer might encode a nose and eyes, then the next layer might recognize that the image contains a face, and so on. While you may need to do a little fine tuning, the deep learning process learns which features to place in which level on its own!

Photo by Cristian Newman on Unsplash

The 「deep」 in deep learning just refers to the number of layers through which the data is transformed (they have a substantial credit assignment path (CAP), which is the chain of transformations from input to output). For a feedforward neural network, the depth of the CAPs is that of the network and the number of hidden layers plus one (the output layer). For a recurrent neural network, a signal might propagate through a layer more than once, so the CAP depth is potentially unlimited! Most researchers agree that deep learning involves CAP depth >2.

Convolutional Neural Networks

One of the most popular types of neural networks is convolutional neural networks (CNNs). The CNN convolves (not convolutes…) learned features with input data and uses 2D convolutional layers, which means that this type of network is ideal for processing (2D) images. The CNN works by extracting features from images, meaning that the need for manual feature extraction is eliminated. The features are not trained! They』re learned while the network trains on a set of images, which makes deep learning models extremely accurate for computer vision tasks. CNNs learn feature detection through tens or hundreds of hidden layers, with each layer increasing the complexity of the learned features.(Want to learn more? Check out Introduction to Convolutional Neural Networks by Jianxin Wu and Yann LeCun』s original article, Gradient-Based Learning Applied to Document Recognition.)

Recurrent neural networks

While convolutional neural networks are typically used for processing images, recurrent neural networks (RNNs) are used for processing language. RNNs don』t just filter information from one layer into the next, they have built-in feedback loops where the output from one layer might be fed back into the layer preceding it. This actually lends the network a sort of memory.Generative adversarial networks

In generative adversarial networks (GANs), two neural networks fight it out. The generator network tries to create convincing 「fake」 data while the discriminator tries to tell the difference between the fake data and the real stuff. With each training cycle, the generator gets better at creating fake data and the discriminator gets sharper at spotting the fakes. By pitting the two against each other during training, both networks improve. (Basically, shirts vs. skins here. The home team is playing itself to improve its game.) GANs can be used for extremely interesting applications, including generating images from written text. GANs can be tough to work with, but more robust models are constantly being developed.

Deep Learning in the Future

The future is full of potential for anyone interested in deep learning. The most remarkable thing about a neural network is its ability to deal with vast amounts of disparate data. That becomes more and more relevant now that we』re living in an era of advanced smart sensors which can gather an unbelievable amount of data every second of every day. It』s estimated that we are currently generating 2.6 quintillion bytes of data every single day. This is an enormous amount of data. While traditional computers have trouble dealing with and drawing conclusions from so much data, deep learning actually becomes more efficient as the amount of data grows larger. Neural nets are capable of discovering latent structures within vast amounts of unstructured data, like raw media for example, which are the majority of data in the world.The possibilities are endless!

下載開源日報APP:https://openingsource.org/2579/

加入我們:https://openingsource.org/about/join/

關注我們:https://openingsource.org/about/love/