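Today's recommended open source project: "Become a naming master: codelf"

Today's recommended English article: "Would you let an AI Camera in your home?"

Today's recommended open source project: "Become a naming master: codelf" Link: GitHub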

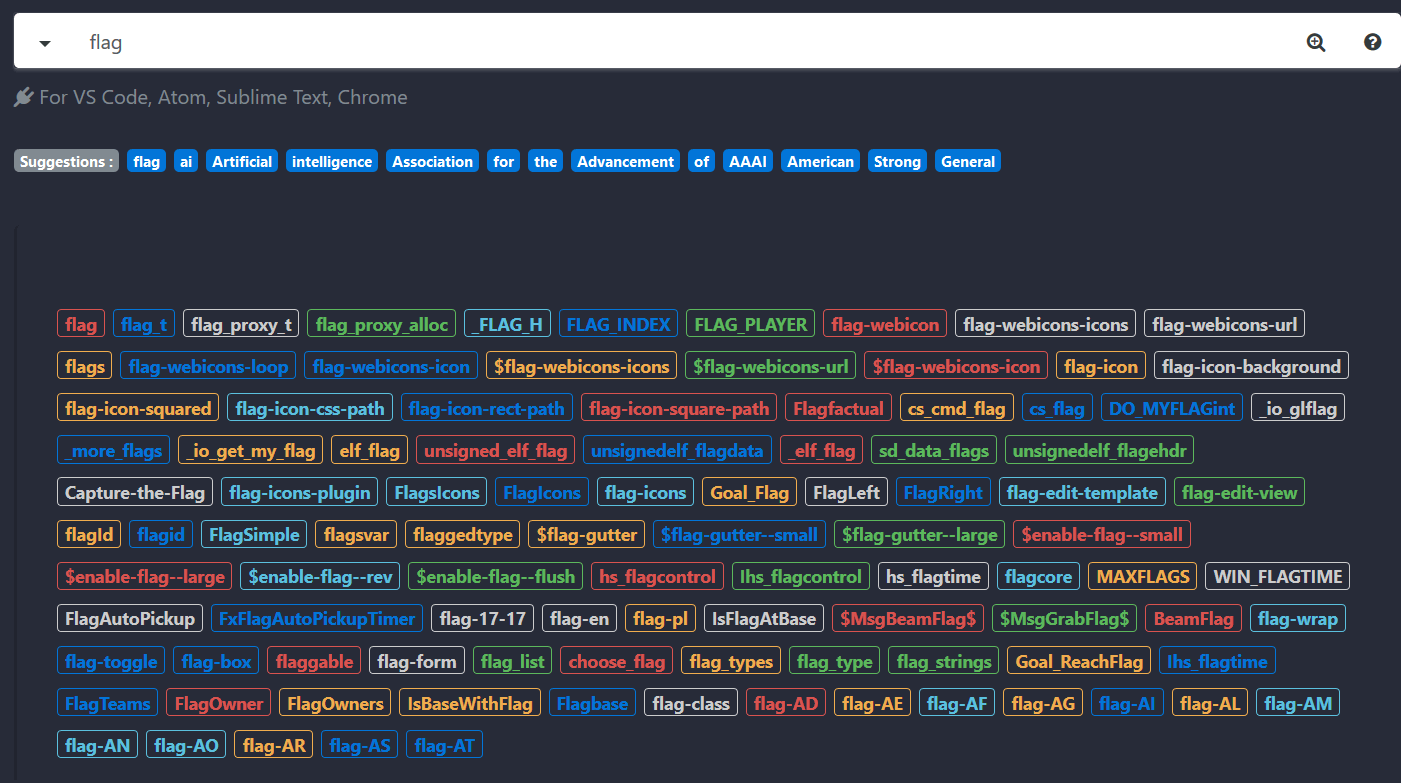

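Why we recommend it: Still struggling to come up with names for your variables? Put down that book of baby names; it will only put your life in danger. This project makes it quick and easy to find a suitable variable name: just CodeLF it. It can be installed as a plugin for VSCode, Atom, or Sublime Text, or you can use the web version. If you want to know where it gets all these variable names, click the Repo listed for each name; they do not come out of thin air.

Today's recommended English article: "Would you let an AI Camera in your home?" Author: Alexandre Gonfalonieri

Original link: https://medium.com/swlh/would-you-let-an-ai-camera-in-your-home-1292cd558193

Why we recommend it: Smart cameras may well be useful, but you won't catch Santa Claus with one.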

Would you let an AI Camera in your home?

Are you thinking about buying an AI security camera for your home? It sounds like a good purchase for your home safety.

Cameras can already connect to Wi-Fi and have been an integral part of our smart devices for years, but they haven't had "minds" of their own.

The world of automated surveillance is booming, with new machine learning techniques giving cameras the ability to spot troubling behavior without human supervision. Indeed, new deep learning techniques have enabled us to analyze video footage more quickly and cheaply than ever before.

This type of A.I. for security is known as "rule-based" because a human programmer must set rules for all of the things for which the user wishes to be alerted. This is the most prevalent form of A.I. for security. The hard drive that houses the program can either be located in the cameras themselves or can be in a separate device that receives the input from the cameras.
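To make the "rule-based" idea above concrete, here is a minimal sketch in Python. It assumes some vision model has already turned a video frame into labeled detections; the `Detection` fields, the zone names, and the two rules are hypothetical illustrations, not taken from any real camera product.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Detection:
    """One object reported by a (hypothetical) vision model for a frame."""
    label: str  # e.g. "person", "package"
    zone: str   # e.g. "front_door", "kitchen"
    hour: int   # local hour of day, 0-23


@dataclass(frozen=True)
class Rule:
    """A human-written condition; its name doubles as the alert text."""
    name: str
    matches: Callable[[Detection], bool]


def evaluate(rules: List[Rule], detection: Detection) -> List[str]:
    """Return the names of every rule that this detection triggers."""
    return [rule.name for rule in rules if rule.matches(detection)]


# Hypothetical rules a programmer might set up for a home camera.
RULES = [
    Rule(
        "person at front door at night",
        lambda d: d.label == "person"
        and d.zone == "front_door"
        and (d.hour >= 22 or d.hour < 6),
    ),
    Rule(
        "package on doorstep",
        lambda d: d.label == "package" and d.zone == "front_door",
    ),
]

alerts = evaluate(RULES, Detection("person", "front_door", 23))
```

The point of the sketch is that the system only alerts on conditions somebody wrote down in advance; anything not covered by a rule passes silently.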

This is one of the key driving factors behind the push to bring AI and video surveillance together. The idea is that advanced software could supplement human judgment and provide for more accurate and safe surveillance.

Cameras are really important

From saving lives to keeping us safe

Boston was full of cameras at the time of the 2013 marathon bombing. But none of them was able to detect the threat represented by the intentionally abandoned backpacks, each containing a bomb. The video footage was predominantly analyzed by the eyes of the police department attempting to identify and locate the perpetrators. Because the alternative, computer-based image analysis algorithms, was both slow and inaccurate, valuable time may have been lost.

Automated analytics algorithms are conceptually able, for example, to sift through an abundance of security camera footage in order to pinpoint an object left at a scene and containing an explosive device or other contents of interest to investigators. And after capturing facial features and other details of the person who left the object, analytics algorithms can conceptually also index image databases from social media and private sources in order to rapidly identify the suspect.

How do artificial intelligence and embedded vision processing intersect? Computer vision is a broad, interdisciplinary field that attempts to extract useful information from visual inputs, by analyzing images and other raw sensor data. The term "embedded vision" refers to the use of computer vision in embedded systems.

Historically, image analysis techniques have typically been implemented only in complex and expensive, and therefore niche, surveillance systems. However, advances in cost, performance, and power consumption are now paving the way for the proliferation of AI home cameras.

Why do we need AI cameras?

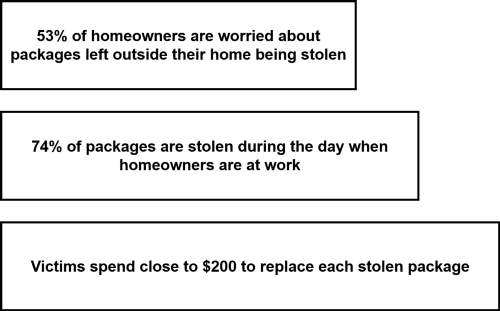

Let me give you an example… As more consumers move towards online shopping and have their items delivered to their doorstep, the risk of having these packages stolen has increased.

It would be great if we could stop worrying about our packages thanks to an AI camera.

Indeed, smart cameras with built-in AI capabilities offer new conveniences, like a phone notification that a child just arrived home safely, or that the dog walker really did walk the dog.

For example, many users opt to have their camera notify them as soon as it recognizes that their kids have arrived home from school. The system's machine learning capabilities enable it to execute more complex functions as well, such as sending an alert when an elderly live-in parent doesn't appear in the kitchen by a certain time of day.

Facial recognition in still images and video is already seeping into the real world. Some companies have created a program where facial recognition is used instead of tickets for events. The venue knows who you are, maybe from a picture you upload or your social media profile, sees your face when you show up and knows if you're allowed in.
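The "didn't appear in the kitchen by a certain time" alert is an absence check rather than a detection. A rough sketch of that logic, assuming the camera already produces a list of (person, zone, time) sightings; the function name and the tuple layout are hypothetical:

```python
from datetime import time
from typing import Iterable, Tuple

# Hypothetical record layout: (who was recognized, where, at what local time).
Sighting = Tuple[str, str, time]


def missed_check_in(sightings: Iterable[Sighting],
                    person: str, zone: str, deadline: time) -> bool:
    """Return True when `person` was not seen in `zone` at or before `deadline`."""
    return not any(
        who == person and where == zone and when <= deadline
        for who, where, when in sightings
    )


morning = [
    ("kid", "kitchen", time(7, 30)),
    ("grandma", "hallway", time(8, 15)),
]

# Grandma was seen, but not in the kitchen, so an alert would be due at 10:00.
should_alert = missed_check_in(morning, "grandma", "kitchen", time(10, 0))
```

In a real system this check would run once the deadline has passed, and it depends entirely on how reliably the camera recognizes the person in the first place.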

All these things do improve our security; there is no question about that. However, they will also bring new risks to privacy in public and private spaces. Indeed, companies are exploring how smart cameras can be used to gather marketing data.

Limits

As great as they are, we need to be careful…

A Google patent from 2016 described how cameras in a home could use visual clues like books and musical instruments to learn about a person's interests, and suggest content they might like. However, Google declined to comment…

Many functions envisioned for smart cameras depend on transmitting information they've detected, such as faces they've identified. We should worry that smart-home enthusiasts may give up data on themselves that they didn't intend to share. Users of smart speakers and other smart-home devices tend to trust blindly that their gadgets won't betray them, and pay little mind to privacy settings or disclosures, but for how long?

So what's a privacy-minded, law-abiding citizen to do when surveillance becomes the norm?

Not much. Early research has identified ways to trick facial recognition software, using either specially made glasses that fool the algorithms or face paint that throws off the AI. But these often require knowledge of how the facial recognition algorithm works.

Another issue is that some companies are gathering data on customers through special cameras at shops and outlets, so that the collected information can be analyzed to improve their sales.

Indeed, an increasing number of businesses are setting up permanent cameras separately from security ones to enable recorded facial images to be analyzed with artificial intelligence.

Could such AI security cameras be trained to look for specific types of people, compounding existing biases? Is it easy to hack into the 24-hour video footage taken with these security cameras, or to hack into the AI and change its directive?

Filmed faces are regarded as a kind of personal information. The government allows such data to be used for commercial purposes if it is deleted after the age and other attributes of the people captured have been analyzed, which raises concerns among experts over personal privacy.

Behavior analysis is something that a lot of tech companies are researching.

Nobody wants to feel like Big Brother is constantly watching. Several companies already assure people that these systems know when to stop collecting information. For example, a camera in the home can be told to shut down when certain "intimate" situations arise.

My opinion is that the integration of AI technology into advanced camera systems is something that's inevitable and exciting. But if you happen to purchase one of these cameras, you need to make sure about the privacy element…

Download the Open Source Daily app: https://openingsource.org/2579/

Join us: https://openingsource.org/about/join/

Follow us: https://openingsource.org/about/love/