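Every day we recommend one quality open-source project on GitHub and one selected original English article on technology or programming. Follow Open Source Daily (开源日报). QQ group: 202790710; Telegram group: https://t.me/OpeningSourceOrg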

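Today's recommended open-source project: "LiteDB, a lightweight and fast embedded database"

Why we recommend it: LiteDB is a single-data-file .NET NoSQL document store. It is a small, lightweight, fast embedded NoSQL database that makes it easy to store and search documents.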

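Features:

- Serverless NoSQL document store

- Simple API similar to MongoDB

- 100% C# code, supports .NET 3.5 / .NET 4.0 / NETStandard 1.3 / NETStandard 2.0, single DLL (under 300 KB)

- Thread-safe and process-safe

- ACID at the document/operation level

- Data recovery after a write failure (journal mode)

- Data file encryption using DES (AES) cryptography

- Map your POCO classes to BsonDocument using attributes or the fluent mapping API

- Store files and stream data (similar to GridFS in MongoDB)

- Single data file storage (similar to SQLite)

- Fast search based on indexed document fields (up to 16 indexes per collection)

- LINQ query support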

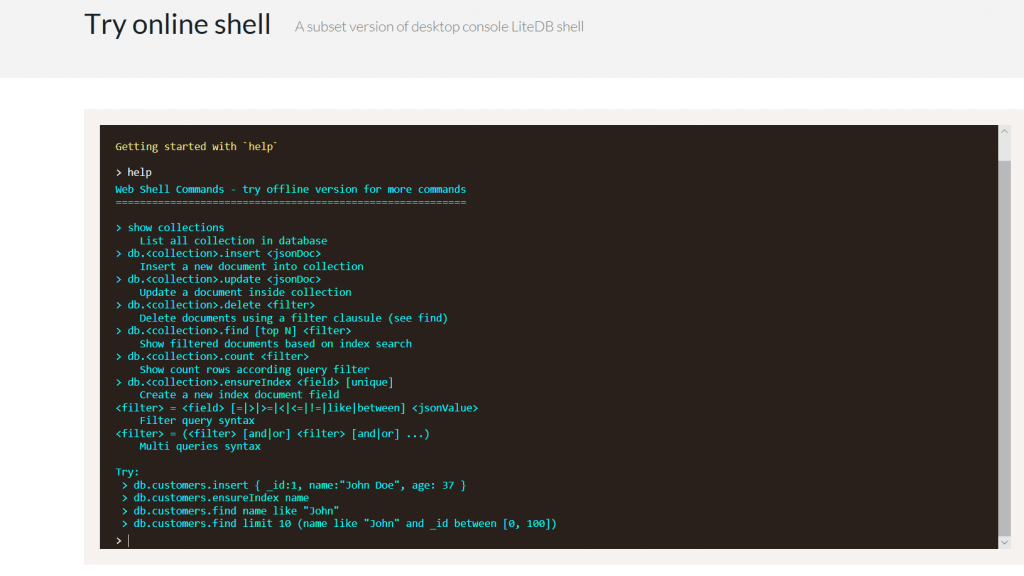

- Shell command line

- Pretty fast compared with SQLite

- Open source and free for everyone, including commercial use

- Install from NuGet: Install-Package LiteDB
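As a rough illustration of the MongoDB-like API, field indexes, and LINQ queries listed above, here is a minimal sketch. It assumes the LiteDB NuGet package is installed; the `Customer` class, the `customers` collection name, and the `MyData.db` path are hypothetical names chosen for this example, and API details may differ between LiteDB versions.

```csharp
using System;
using LiteDB; // assumes: Install-Package LiteDB

// Hypothetical POCO used only for this illustration.
public class Customer
{
    public int Id { get; set; }      // mapped to the document _id by convention
    public string Name { get; set; }
    public int Age { get; set; }
}

public static class Program
{
    public static void Main()
    {
        // Opens the single data file, creating it if it does not exist.
        // "MyData.db" is an arbitrary example path.
        using (var db = new LiteDatabase("MyData.db"))
        {
            // Collections are created on first use.
            var customers = db.GetCollection<Customer>("customers");

            // Index the Name field so searches on it can use the index.
            customers.EnsureIndex(x => x.Name);

            // Insert a document; an int Id left at 0 is auto-assigned.
            customers.Insert(new Customer { Name = "John Doe", Age = 39 });

            // Query with a LINQ-style predicate.
            foreach (var c in customers.Find(x => x.Age > 20))
            {
                Console.WriteLine($"{c.Id}: {c.Name} ({c.Age})");
            }
        }
    }
}
```

Because the database is a single file opened in-process, there is no server to configure; disposing the `LiteDatabase` instance releases the file.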

Try it online:

Related resources:

A GUI viewer tool: https://github.com/falahati/LiteDBViewer

A GUI editor tool: https://github.com/JosefNemec/LiteDbExplorer

Lucene.NET directory: https://github.com/sheryever/LiteDBDirectory

LINQPad support: https://github.com/adospace/litedbpad

F# support: https://github.com/Zaid-Ajaj/LiteDB.FSharp

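Today's recommended English article: "Should Software Engineers Care About Ethics?" by Mae Capozzi

Original article: https://digitalculturist.com/should-software-engineers-care-about-ethics-8b1d98a62b66

Why we recommend it: Should software engineers care about ethics? In practice, software development runs into many moral and ethical challenges. What would you choose?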

Should Software Engineers Care About Ethics?

In his essay, “Design’s Lost Generation,” Mike Monteiro describes how he shocked a crowd of designers at a San Francisco tech conference by suggesting design — like medicine, law, and even driving — should be regulated:

“A year ago I was in the audience at a gathering of designers in San Francisco…at some point in the discussion I raised my hand and suggested…that modern design problems were very complex. And we ought to need a license to solve them.”

In response to the designers’ surprise, Monteiro asked:

“How many of you would go to an unlicensed doctor?” I asked. And the room got very quiet.

“How many of you would go to an unaccredited college?” I asked. And the room got even quieter.

Monteiro understands that software has become too deeply embedded in everything we do for it to remain unregulated. However, companies like Facebook, Uber, and Volkswagen have proven that they do not consider the ethical implications of their products and services by focusing on revenue rather than the safety and security of their users. For example, Facebook has revealed massive amounts of user data to advertisers and companies like Cambridge Analytica without user consent. Uber built software that helped them evade officials in cities where Uber’s services were still illegal. Volkswagen wrote software to cheat emissions tests — tests meant to protect the environment and the people who live in it.


We have placed our data and our lives in the hands of people unequipped with a guiding set of ethical principles to dictate how they should approach the consequences of the features they are building. As the last line of defense against corrupt products, software engineers need to develop an ethical framework that prevents us from harming our users and finding ourselves complicit in corruption.

Executives and product managers hold most of the decision-making power when deciding whether a company should build a product or feature. Unfortunately, we’ve become obsessed with shipping features as quickly as possible in a system that values speed over quality. CEOs frequently make decisions based on the promise of securing more funding from venture capitalists, as opposed to assuring the safety and health of their user base.

While some engineers are lucky enough to work for a company that values ethics, most don’t have that experience. I’d wager that many of us will find ourselves in at least one situation where we need to decide between building features that will hurt our users or refusing and potentially losing our jobs in the process. To prevent hurting users, we can study the actions of Uber, Volkswagen, and Facebook to encourage software engineers to evaluate the ethics of the future products they develop.

In September of 2015, the Environmental Protection Agency found that many diesel cars built by Volkswagen sidestepped environmental regulations. The BBC reported that “the EPA has said that the engines had computer software that could sense test scenarios by monitoring speed, engine operation, air pressure and even the position of the steering wheel.” Volkswagen’s software could detect when a vehicle was undergoing testing and lower the diesel emissions during that period, thereby violating the Clean Air Act.

The scandal had consequences for the engineers and managers who developed the software. Oliver Schmidt, the former general manager for Volkswagen’s Engineering and Environmental Office, received 7 years in prison for his involvement in the scandal. James Liang was sentenced to 40 months in prison for helping to develop the software that allowed Volkswagen to cheat emissions tests. According to MIT News, the “excess emissions generated by 482,000 affected vehicles sold in the U.S. will cause approximately 60 premature deaths across the U.S.” The software Volkswagen built had fatal consequences. And because engineers did not step up to block the decisions made by the corporation, they were also held accountable for their actions.

Like Volkswagen, Uber also resorted to illegal behavior to gain a leg up on its competition. In March of 2017, The New York Times reported that Uber had written software called Greyball as early as 2014, which enabled the company to operate in cities like Portland, Oregon, where the ride-hailing service was not yet legal. When an official trying to crack down on Uber’s illegal activity tried to hail a ride, Greyball spun up a fake instance of its application populated with “ghost” cars. If an Uber driver accepted a ride with one of those officials, the driver would receive a call instructing them to cancel the ride.

After the press caught on, Uber tried to spin Greyball as software designed to protect its drivers from potential threats, not just to evade officials:

This program denies ride requests to users who are violating our terms of service — whether that’s people aiming to physically harm drivers, competitors looking to disrupt our operations, or opponents who collude with officials on secret ‘stings’ meant to entrap drivers.

The statement tries to make it seem like Uber is the victim in this case. It has a tone of snide derision, especially with the word ‘stings’ in quotation marks. In the statement, Uber doesn’t apologize or admit to any wrongdoing, even though the software is used to break the law. Additionally, Uber uses the excuse of protecting drivers to evade the law, even though it’s well-known that Uber does little to protect or support their drivers.

While Facebook’s actions have not directly taken lives, the company has been collecting and sharing private user data for years. In 2018, the story broke that Cambridge Analytica, a data analytics firm, used a loophole in Facebook’s API to access Facebook data from more than 87 million user accounts. The API not only exposed access to a user’s freely-given data, but also data about a user’s “friends” without their consent. Facebook’s documentation dictated that although accessing user data non-consensually was possible, application developers should not do it.

Cambridge Analytica should not have taken advantage of the loophole in the API, but the brunt of the blame lies with Facebook. Facebook developers exposed personal data not freely given by the user. They enabled the ethical breach that allowed Cambridge Analytica to build a massive marketing database and share that data with political campaigns.


Vox’s Aja Romano explains how the breach of user trust happened:

The factors that allowed Cambridge Analytica to hijack Facebook user data boiled down to one thing: no one involved in the development and deployment of this technology stopped to consider what the results might look like at scale.

The software engineers who built the API, the product managers who came up with the feature, and the management at Facebook didn’t think about the ethical implications of the software they were building. They didn’t have a framework to turn to and did not know how to determine whether what they were building was ethical, nor did they try to find out.

The time has come for engineers to take responsibility for their creations. We can no longer sustain a myopic view of the products we are creating. We can no longer only focus on writing elegant and well-tested code, or shipping the feature on time. We need to understand the context of what we’re building and to validate that it won’t harm our users.

We carefully select the parts that make up our applications. We might choose a framework that will help us write elegant code and we might carefully write tests to prevent our code from breaking. But what do we see when we step back and evaluate our feature in the context of the larger application? Does it protect and serve its users? Or does it shave years off of people’s lives? Does it negatively influence an election? It doesn’t matter if the parts that make up an application are beautiful. All that matters is whether the product is serving its users in a safe and ethical manner.

We need software engineers to start thinking deeply about the ethical implications of the world they are creating. Computer science majors should take courses in the humanities. Software should be regulated. Engineers should develop a shared set of ethics that they can turn to when they are asked to build something illegal or unethical, like they have been by Uber, Volkswagen, Facebook, and many other companies. Lives depend on it.

Mae Capozzi is an English major turned software engineer living in Brooklyn, NY. Her interests include reading, writing, coding, and playing music. Writing clean, maintainable, and ethical code is important to her.
