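Every day we recommend one high-quality open-source project from GitHub and one hand-picked English tech or programming article. Follow Open Source Daily! QQ group: 202790710; Telegram group: https://t.me/OpeningSourceOrg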

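Today's recommended open-source project: "Disassembling with x64dbg"

Why we recommend it: x64dbg is a debugger for Windows that can fully debug DLL and EXE files.

Download: https://github.com/x64dbg/x64dbg/releases

After extracting the archive, run release\x96dbg.exe and choose the debugger that matches your program's type (x32dbg for 32-bit programs, x64dbg for 64-bit ones). Don't worry if you don't know which to pick: choose either one and use it to open the program you want to reverse. If it's the wrong one, a message appears at the bottom of the window.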


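Basic features:

1. Controlling execution

The most basic job of a debugger is to halt a program that is running at full speed and make it execute the way the user wants. A debugger does this by forcing the target program to trigger a carefully constructed exception.

2. Inspecting the running program

Viewing the program's current state, including but not limited to the current thread's registers, the stack, memory, and the disassembly around the current EIP (RIP in 64-bit code).

3. Modifying the execution flow

Modifying memory, disassembled instructions, the stack, registers, and so on.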
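To make item 1 concrete: the "carefully constructed exception" behind an ordinary F2 breakpoint is usually a software breakpoint. The debugger saves one byte of the target instruction and overwrites it with 0xCC (INT3); when the CPU executes that byte it raises a breakpoint exception, the debugger takes control, and it restores the original byte before letting the program continue. The sketch below only simulates this on a local byte buffer. The names `Breakpoint`, `bp_set` and `bp_clear` are hypothetical; a real debugger writes into another process's memory (on Windows, for example, through `WriteProcessMemory`) and also has to move the instruction pointer back by one byte, since it has already stepped past the 0xCC.

```c
#include <assert.h>
#include <stddef.h>

#define INT3_OPCODE 0xCC   /* one-byte x86 breakpoint instruction */

/* Hypothetical bookkeeping for a single software breakpoint. */
typedef struct {
    size_t offset;         /* position of the patched byte */
    unsigned char saved;   /* original byte that INT3 replaced */
    int active;            /* 1 while the breakpoint is armed */
} Breakpoint;

/* Arm: remember the original byte, then overwrite it with INT3.
   Executing that byte would raise a breakpoint exception. */
void bp_set(unsigned char *code, size_t len, size_t offset, Breakpoint *bp)
{
    assert(code != NULL && bp != NULL && offset < len);
    bp->offset = offset;
    bp->saved = code[offset];
    code[offset] = INT3_OPCODE;
    bp->active = 1;
}

/* Disarm: restore the original byte so the instruction runs normally. */
void bp_clear(unsigned char *code, Breakpoint *bp)
{
    assert(code != NULL && bp != NULL);
    if (bp->active) {
        code[bp->offset] = bp->saved;
        bp->active = 0;
    }
}
```

Saving the original byte is what lets the debugger remove the breakpoint later without leaving the program damaged.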

Components:

The debugger consists of three main parts:

- DBG

- GUI (the debugger's graphical interface is based on Qt)

- Bridge

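Overview:

Like OD (OllyDbg), this tool is free to use, and unlike OD it is fully open source. Anyone familiar with OD will notice that its interface is extremely similar to OD's, and it offers the same features. Since OD hasn't been updated in years, it's time to try this debugger, which can also disassemble 64-bit files. (P.S. The software comes with a Chinese interface built in.)

Its color coding is clearer, and the team behind it is still actively developing it (with more and more plugins).

Just drag the file you want to debug into the window! It works almost exactly like OD, and with the Chinese interface it is quite user-friendly.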

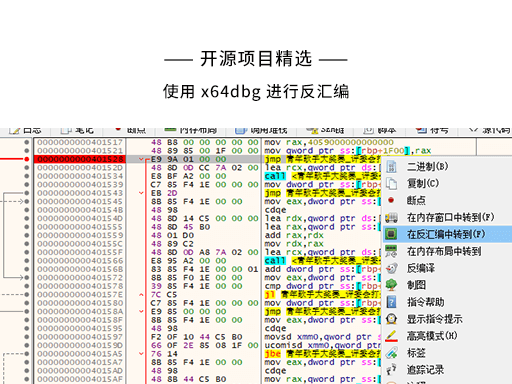

Basic operations:

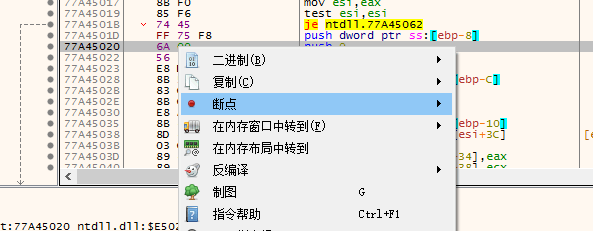

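- Setting breakpoints

Press F2 or use the right-click menu; in x64dbg you can also simply click the small gray dot to the left of an instruction.

- Searching for strings

- Saving your changes once you are done modifying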
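Saving a modification means writing patched bytes back to the file, and a common beginner patch is changing a conditional jump. A minimal sketch on a plain byte buffer, assuming x86 code and handling only the two-byte short jumps `74 rel8` (JE/JZ) and `75 rel8` (JNE/JNZ). The helper names are hypothetical; in x64dbg you would normally edit the instruction in the disassembly view instead of writing code.

```c
#include <assert.h>
#include <stddef.h>

/* Short-form (rel8) x86 opcodes. */
#define OP_JE_SHORT  0x74   /* jump if equal / zero */
#define OP_JNE_SHORT 0x75   /* jump if not equal / not zero */
#define OP_JMP_SHORT 0xEB   /* unconditional short jump */
#define OP_NOP       0x90   /* no operation */

static int is_short_jcc(unsigned char op)
{
    return op == OP_JE_SHORT || op == OP_JNE_SHORT;
}

/* Hypothetical helper: make the jump always taken by rewriting the opcode
   to JMP rel8. The displacement byte is kept, so the target is unchanged.
   Returns 1 if patched, 0 if the bytes are not a supported jump. */
int patch_force_jump(unsigned char *code, size_t len, size_t offset)
{
    assert(code != NULL && offset + 1 < len);   /* opcode + rel8 must fit */
    if (!is_short_jcc(code[offset]))
        return 0;
    code[offset] = OP_JMP_SHORT;
    return 1;
}

/* Hypothetical helper: make the jump never taken by replacing both bytes
   (opcode and displacement) with NOPs, so execution falls through. */
int patch_never_jump(unsigned char *code, size_t len, size_t offset)
{
    assert(code != NULL && offset + 1 < len);
    if (!is_short_jcc(code[offset]))
        return 0;
    code[offset] = OP_NOP;
    code[offset + 1] = OP_NOP;
    return 1;
}
```

Both patches keep the instruction's total length at two bytes, so nothing after it shifts; that is why short jumps are the easy case.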

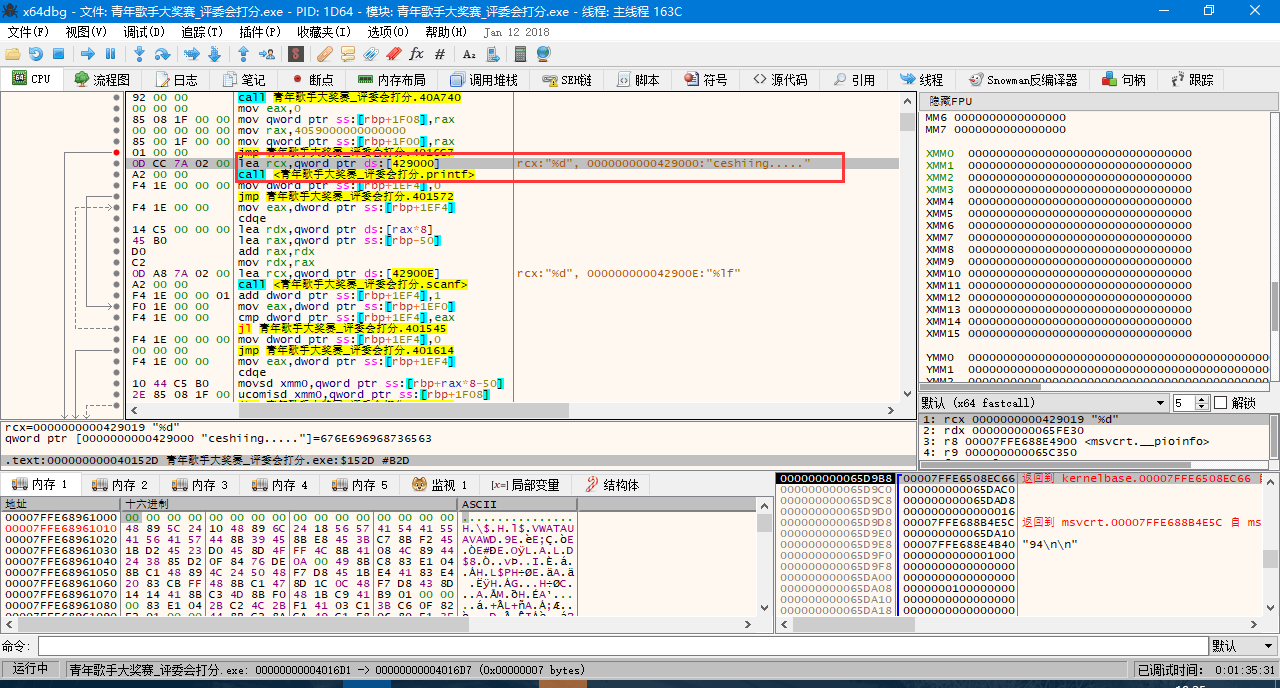

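I'm not particularly skilled at decompilation or assembly, so I chose to analyze a small program I wrote myself and compare the assembly instructions with the source code. Here are the results.

Program initialization accounts for a large number of assembly instructions, none of which we see when writing the program.

x64dbg labels the starting point reached after this initialization as EntryPoint.

After that comes the body of the program itself.

The first two statements initialize the array array[] and the counters n, i, and so on, so I'll skip them here.

Next, the following code runs: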

```c
while (scanf("%d", &n) != EOF)
{
    /* …………………… */
}
```

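Yes, this is an ACM contest problem; it's simple, which makes it easier to analyze…

For this structure, the assembly code looks like this:

The arrow points to the condition check inside while(); let's follow it.

You can see a gray arrow on the left pointing upward; as you'd expect, it leads back to the code right after the condition check we just looked at. The assembly logic here is actually very clear: calls go down level by level and return level by level, exactly like nested function calls. For example, the program calls scanf here; after you type your input, execution passes through a lot of code and finally lands on the instruction immediately after the call to scanf, because the call instruction saved that address as its return address.

Once the integer you typed has been processed and checked, the instruction pointer returns to the while loop and starts executing the first statement in the loop body: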

```c
printf("ceshiing.....");
```

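x64dbg shows two instructions handling this statement: the first loads what is to be displayed (the address of the string), and the second, a call, performs the output. That call in turn runs a large number of instructions, which I won't go into here.

Next come two for loops and the if statements: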

```c
for (i = 0; i < n; i++) { scanf("%lf", array + i); }

for (i = 0; i < n; i++)
{
    if (array[i] > nmax) nmax = array[i];
    if (array[i] < nmin) nmin = array[i];
    sum += array[i];
}
```

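The assembly shows this process very directly.

The first loop reads in the score each judge gives. jmp is an unconditional jump. jl ("jump if less") jumps based on a signed comparison, and the cmp right before it supplies that comparison: cmp subtracts the second operand from the first, discards the result, and sets only the flags that jl examines.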
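The jmp / cmp / jl shape can be written back in C with labels and goto. This is only an illustration: it assumes the block layout GCC typically emits at -O0 (jump to the test first, loop body placed above it); other compilers and optimization levels arrange the blocks differently. The function name is hypothetical, and it sums the array instead of calling scanf so that it runs without input.

```c
#include <assert.h>

/* Hypothetical helper: sums array[0..n-1] using only labels and gotos,
   mirroring the layout a compiler commonly emits for
   for (i = 0; i < n; i++) sum += array[i]; */
double sum_lowered(const double *array, int n)
{
    double sum = 0.0;
    int i = 0;              /* i = 0 */
    assert(n >= 0);
    goto check;             /* jmp check: test the condition first */
body:
    sum += array[i];        /* loop body */
    i++;                    /* i++ */
check:
    if (i < n)              /* cmp i, n ; jl body (signed less-than) */
        goto body;
    return sum;             /* falls through once i >= n */
}
```

Putting the test at the bottom means each iteration needs only one conditional jump; the single unconditional jmp is paid once, on entry.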

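The second loop finds the maximum and the minimum. The structure shows that when an if condition holds, no jump is taken: the compiler tests the opposite condition and jumps over the assignment only when the condition is false.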
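The same goto style shows that inverted branch for the second loop: each if tests the opposite condition and jumps past the assignment, so when the original condition is true execution simply falls through into it. Again a hypothetical illustration; the actual conditional-jump mnemonics used for double comparisons depend on the compiler and on whether it emits x87 or SSE instructions.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: tracks max and min with the branch layout seen in
   the disassembly. Each `if` becomes "jump over the assignment when the
   condition is FALSE"; when it is true, no jump is taken. */
void minmax_lowered(const double *array, int n, double *nmax, double *nmin)
{
    int i = 0;
    assert(n >= 0 && nmax != NULL && nmin != NULL);
    goto check;
body:
    if (!(array[i] > *nmax))   /* jump taken only when NOT greater */
        goto skip_max;
    *nmax = array[i];          /* reached without jumping when the if holds */
skip_max:
    if (!(array[i] < *nmin))   /* jump taken only when NOT smaller */
        goto skip_min;
    *nmin = array[i];
skip_min:
    i++;
check:
    if (i < n)
        goto body;
}
```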

Finally, the following code runs, completing one pass of the outer while loop:

```c
sum -= nmax, sum -= nmin;
sum = sum / (n - 2);
printf("%.2lf\n", sum);
// reset the data for the next test case
nmax = 0, nmin = 100, sum = 0;
```

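Look familiar? We're back at the condition check from the beginning. After the reset comes the check; if it passes, execution returns to the first statement of the while loop… and so on until the loop ends. The assembly further down consists mostly of definitions: this is where the targets of many of the earlier call instructions live, including the wrappers for printf and scanf.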
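For reference, the scoring logic walked through above can be pulled into one self-contained function. The name and signature are assumptions: the original keeps everything inside main and seeds nmax = 0, nmin = 100, which presumes scores between 0 and 100. This sketch seeds both from the first score instead, so it works for any range.

```c
#include <assert.h>

/* Hypothetical reconstruction of the scoring step: drop the single highest
   and single lowest score, then average the rest, matching
   sum -= nmax, sum -= nmin; sum = sum / (n - 2); */
double trimmed_average(const double *scores, int n)
{
    double sum = 0.0;
    double nmax, nmin;
    int i;

    assert(n > 2);              /* n - 2 must stay positive */
    nmax = nmin = scores[0];
    for (i = 0; i < n; i++) {
        if (scores[i] > nmax) nmax = scores[i];
        if (scores[i] < nmin) nmin = scores[i];
        sum += scores[i];
    }
    sum -= nmax;
    sum -= nmin;
    return sum / (n - 2);
}
```

A main that reads n and the scores with scanf and prints the result with printf("%.2lf\n", ...) would reproduce the original program's behavior around this function.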

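That wraps up the main part of this walkthrough. Assembly is far harder to read than a high-level language; even C, known for being close to the hardware, becomes this complex once it is translated into assembly. Even so, assembly is highly logical. The call instruction keeps the program modular, so code that is contiguous in C does not get scattered. Simple jump instructions form complete, clear loop structures. We can also see that the program's own code is concentrated in a small region, namely the part right after EntryPoint, which makes the code easier to analyze. If you're interested in reverse engineering or cracking programs, there is plenty more material online; this article is only an introduction to analyzing a program this way.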

Finally, here are the wonderful developers:

mrexodia

Sigma

tr4ceflow

Dreg

Nukem

Herz3h

Torusrxxx

And the contributors:

blaquee

wk-952

RaMMicHaeL

lovrolu

fileoffset

SmilingWolf

ApertureSecurity

mrgreywater

Dither

zerosum0x0

RadicalRaccoon

fetzerms

muratsu

ForNeVeR

wynick27

Atvaark

Avin

mrfearless

Storm Shadow

shamanas

joesavage

justanotheranonymoususer

gushromp

Forsari0

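Today's recommended English article: "Simple Cloud Hardening" by Kyle Rankin

Original link: https://www.linuxjournal.com/content/simple-cloud-hardening

Why we recommend it: If you run Linux servers in the cloud, how can you harden them against intrusion and protect security and privacy? This article offers a simple, practical set of steps.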

Simple Cloud Hardening

Apply a few basic hardening principles to secure your cloud environment.

I've written about simple server-hardening techniques in the past. Those articles were inspired in part by the Linux Hardening in Hostile Networks book I was writing at the time, and the idea was to distill the many different hardening steps you might want to perform on a server into a few simple steps that everyone should do. In this article, I take the same approach only with a specific focus on hardening cloud infrastructure. I'm most familiar with AWS, so my hardening steps are geared toward that platform and use AWS terminology (such as Security Groups and VPC), but as I'm not a fan of vendor lock-in, I try to include steps that are general enough that you should be able to adapt them to other providers.

New Accounts Are (Relatively) Free; Use Them

One of the big advantages with cloud infrastructure is the ability to compartmentalize your infrastructure. If all your servers sit in the same rack, that might be difficult, but on cloud infrastructure, you can take advantage of the same technology that isolates one customer from another to isolate one of your infrastructure types from the others. Although this doesn't come completely for free (it adds some extra overhead when you set things up), it's worth it for the strong isolation it provides between environments.

One of the first security measures you should put in place is separating each of your environments into its own high-level account. AWS allows you to generate a number of different accounts and connect them to a central billing account. This means you can isolate your development, staging and production environments (plus any others you may create) completely into their own individual accounts that have their own networks, their own credentials and their own roles totally isolated from the others. With each environment separated into its own account, you limit the damage attackers can do if they compromise one infrastructure to just that account. You also make it easier to see how much each environment costs by itself.

In a traditional infrastructure where dev and production are together, it is much easier to create accidental dependencies between those two environments and have a mistake in one affect the other. Splitting environments into separate accounts protects them from each other, and that independence helps you identify any legitimate links that environments need to have with each other. Once you have identified those links, it's much easier to set up firewall rules or other restrictions between those accounts, just like you would if you wanted your infrastructure to talk to a third party.

Lock Down Security Groups

One advantage to cloud infrastructure is that you have a lot tighter control over firewall rules. AWS Security Groups let you define both ingress and egress firewall rules, both with the internet at large and between Security Groups. Since you can assign multiple Security Groups to a host, you have a lot of flexibility in how you define network access between hosts.

My first recommendation is to deny all ingress and egress traffic by default and add specific rules to a Security Group as you need them. This is a fundamental best practice for network security, and it applies to Security Groups as much as to traditional firewalls. This is particularly important if you use the Default security group, as it allows unrestricted internet egress traffic by default, so that should be one of the first things to disable. Although disabling egress traffic to the internet by default can make things a bit trickier to start with, it's still a lot easier than trying to add that kind of restriction after the fact.
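The default-deny idea can be shown with a toy model: a rule list that contains only ALLOW entries, where anything no rule matches is refused. This is not the AWS API or AWS's evaluation engine; real Security Groups also match protocols, keep ingress and egress lists separate, and are stateful (return traffic for an allowed connection is permitted automatically), none of which is modeled here. `AllowRule`, `in_cidr` and `is_allowed` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Toy allow-only rule: source CIDR plus an inclusive TCP port range. */
typedef struct {
    uint32_t cidr_base;          /* network address, e.g. 10.0.0.0 = 0x0A000000 */
    int prefix_len;              /* 0..32 */
    uint16_t port_lo, port_hi;   /* inclusive port range */
} AllowRule;

/* 1 if addr falls inside base/prefix_len. */
static int in_cidr(uint32_t addr, uint32_t base, int prefix_len)
{
    uint32_t mask;
    assert(prefix_len >= 0 && prefix_len <= 32);
    /* shifting a 32-bit value by 32 is undefined, so /0 is special-cased */
    mask = prefix_len == 0 ? 0 : UINT32_C(0xFFFFFFFF) << (32 - prefix_len);
    return (addr & mask) == (base & mask);
}

/* Default deny: returns 1 only if some rule explicitly allows the traffic. */
int is_allowed(const AllowRule *rules, size_t count, uint32_t src, uint16_t port)
{
    size_t k;
    assert(rules != NULL || count == 0);
    for (k = 0; k < count; k++) {
        if (in_cidr(src, rules[k].cidr_base, rules[k].prefix_len) &&
            port >= rules[k].port_lo && port <= rules[k].port_hi)
            return 1;
    }
    return 0;   /* no matching rule: deny */
}
```

Because an empty rule list denies everything, each new rule is a deliberate, reviewable opening rather than a restriction retrofitted later.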

You can make things very complicated with Security Groups; however, my recommendation is to try to keep them simple. Give each server role (for instance web, application, database and so on) its own Security Group that applies to each server in that role. This makes it easy to know how your firewall rules are being applied and to which servers they apply. If one server in a particular role needs different network permissions from the others, it's a good sign that it probably should have its own role.

The role-based Security Group model works pretty well but can be inconvenient when you want a firewall rule to apply to all your hosts. For instance, if you use centralized configuration management, you probably want every host to be allowed to talk to it. For rules like this, I take advantage of the Default Security Group and make sure that every host is a member of it. I then use it (in a very limited way) as a central place to define any firewall rules I want to apply to all hosts. One rule I define in particular is to allow egress traffic to any host in the Default Security Group—that way I don't have to write duplicate ingress rules in one group and egress rules in another whenever I want hosts in one Security Group to talk to another.

Use Private Subnets

On cloud infrastructure, you are able to define hosts that have an internet-routable IP and hosts that only have internal IPs. In AWS Virtual Private Cloud (VPC), you define these hosts by setting up a second set of private subnets and spawning hosts within those subnets instead of the default public subnets.

Treat the default public subnet like a DMZ and put hosts there only if they truly need access to the internet. Put all other hosts into the private subnet. With this practice in place, even if hosts in the private subnet were compromised, they couldn't talk directly to the internet even if an attacker wanted them to, which makes it much more difficult to download rootkits or other persistence tools without setting up elaborate tunnels.

These days it seems like just about every service wants unrestricted access to web ports on some other host on the internet, but an advantage to the private subnet approach is that instead of working out egress firewall rules to specific external IPs, you can set up a web proxy service in your DMZ that has broader internet access and then restrict the hosts in the private subnet by hostname instead of IP. This has an added benefit of giving you a nice auditing trail on the proxy host of all the external hosts your infrastructure is accessing.
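The decision such a proxy makes for each request from the private subnet boils down to a hostname allowlist lookup. A minimal sketch, assuming exact, case-sensitive matching and a hypothetical `proxy_allows` function; a real deployment would more likely configure an existing proxy (Squid's domain ACLs, for example) than write this by hand.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical allowlist check for an egress web proxy in the DMZ:
   forward a request only if its destination hostname is listed. */
int proxy_allows(const char *const *allowlist, size_t count, const char *host)
{
    size_t k;
    assert(host != NULL && (allowlist != NULL || count == 0));
    for (k = 0; k < count; k++) {
        if (strcmp(allowlist[k], host) == 0)
            return 1;   /* exact match: forward the request */
    }
    return 0;           /* not listed: refuse */
}
```

Matching on hostnames keeps the list stable even when the external services behind those names change IP addresses.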

Use Account Access Control Lists Minimally

AWS provides a rich set of access control list tools by way of IAM. This lets you set up very precise rules about which AWS resources an account or role can access using a very complicated syntax. While IAM provides you with some pre-defined rules to get you started, it still suffers from the problem all rich access control lists have—the complexity makes it easy to create mistakes that grant people more access than they should have.

My recommendation is to use IAM only as much as is necessary to lock down basic AWS account access (like sysadmin accounts or orchestration tools for instance), and even then, to keep the IAM rules as simple as you can. If you need to restrict access to resources further, use access control at another level to achieve it. Although it may seem like giving somewhat broad IAM permissions to an AWS account isn't as secure as drilling down and embracing the principle of least privilege, in practice, the more complicated your rules, the more likely you will make a mistake.

Conclusion

Cloud environments provide a lot of complex options for security; however, it's more important to set a good baseline of simple security practices that everyone on the team can understand. This article provides a few basic, common-sense practices that should make your cloud environments safer while not making them too complex.
