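Open Source Daily recommends one quality open-source GitHub project and one selected English-language tech or programming article every day. Follow us to keep up. QQ group: 202790710; Telegram group: https://t.me/OpeningSourceOrg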

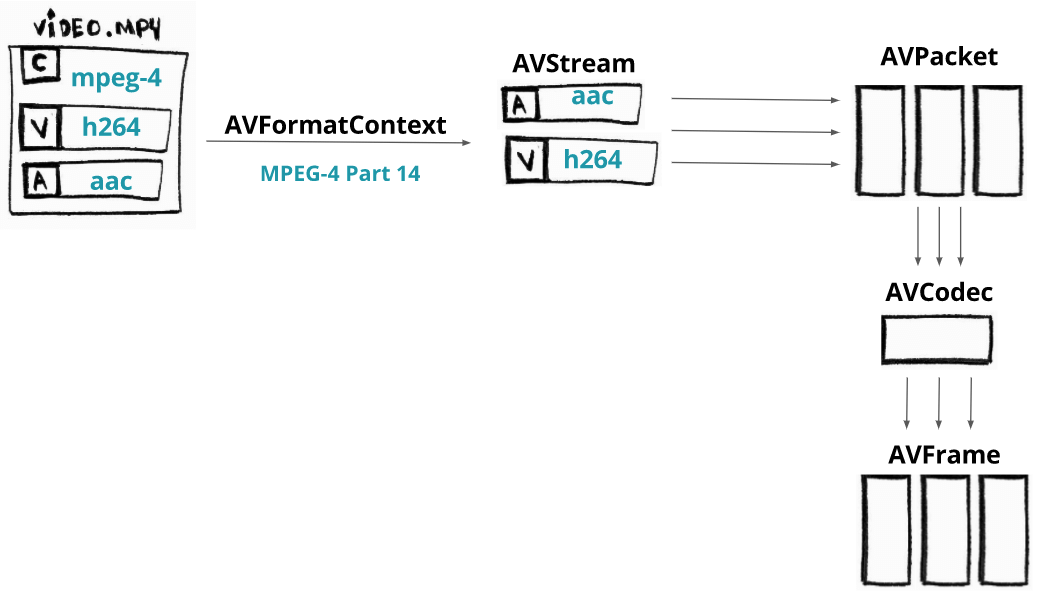

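Today's recommended open-source project: "An FFmpeg Tutorial"

Why we recommend it: FFmpeg is an excellent open-source tool for video processing, with very broad format and feature support.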

Basic argument format:

FFmpeg is a command-line tool. Its arguments follow this pattern:

ffmpeg {1} {2} -i {3} {4} {5}

1. global options

2. input file options

3. input url (location of the input file)

4. output file options

5. output url (location of the output file)
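The five slots above can be sketched as a small helper that assembles an argument list in the right order. This is only an illustration: `buildFfmpegArgs` and its parameter names are hypothetical and not part of FFmpeg, and the snippet only builds and prints the command rather than running it.

```javascript
// Assemble an ffmpeg argument list in the slot order {1} {2} -i {3} {4} {5}.
// buildFfmpegArgs is a hypothetical helper, not an FFmpeg API.
function buildFfmpegArgs({
  globalOptions = [],
  inputOptions = [],
  input,
  outputOptions = [],
  output,
}) {
  if (!input || !output) {
    throw new Error('both input and output are required');
  }
  return [
    ...globalOptions, // {1} global options, e.g. -y
    ...inputOptions,  // {2} options applied to the input that follows
    '-i', input,      // {3} input url
    ...outputOptions, // {4} options applied to the output that follows
    output,           // {5} output url
  ];
}

const exampleArgs = buildFfmpegArgs({
  globalOptions: ['-y'],
  input: 'input.mp4',
  outputOptions: ['-an'],
  output: 'output.avi',
});
console.log(['ffmpeg', ...exampleArgs].join(' '));
// prints: ffmpeg -y -i input.mp4 -an output.avi
```

Keeping the arguments as an array instead of one string avoids quoting problems if the list is later handed to a process-spawning API.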

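For everyday use, this form is all you need to remember:

ffmpeg -i input.xxx {4} output.xxx

Slot {4} accepts many options: encoder selection, audio processing, resolution, frame rate, and so on. The most common ones are:

-s resolution, written as 123x456 with a lowercase x (to keep the picture size unchanged, use the source video's resolution)

-aspect display aspect ratio (4:3, 16:9 or 1.3333, 1.7777)

-an disable audio

-ac number of audio channels: 1 is mono, 2 is stereo. Use 1 for a mono TV rip (it roughly halves the audio size) and 2 for a high-quality DVD rip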

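For example, to convert a 1980x1080 MP4 video to an AVI of the same size, run:

ffmpeg -i input.mp4 -s 1980x1080 output.avi

FFmpeg command-line option reference (from http://blog.csdn.net/maopig/article/details/6610257). The list reflects an older FFmpeg release, so some of these options may be renamed or absent in current builds: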

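Basic options:

-formats list all available formats

-f fmt force the format (audio or video format)

-i filename input file name; on Linux this can also be a capture source such as :0.0 (screen recording) or a camera device

-y overwrite existing output files

-t duration limit the recording time to duration

-fs limit_size set an upper limit on the file size

-ss time_off start at the given time in seconds; the [-]hh:mm:ss[.xxx] form is also supported

-itsoffset time_off set a time offset in seconds; it affects all input files that follow. The offset is added to the timestamps of the input file, so a positive offset means the corresponding streams are delayed by offset seconds. The [-]hh:mm:ss[.xxx] form is also supported

-title string title

-timestamp time timestamp

-author string author

-copyright string copyright notice

-comment string comment

-album string album name

-v verbose logging verbosity

-target type set the target file type ("vcd", "svcd", "dvd", "dv", "dv50", "pal-vcd", "ntsc-svcd", …)

-dframes number set the number of data frames to record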
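The -ss and -itsoffset entries above accept either plain seconds or the [-]hh:mm:ss[.xxx] form. As a rough illustration of how the two forms relate, the sketch below converts either one to a number of seconds. `parseTimeOffset` is a hypothetical helper; it covers only the two forms named in this list and makes no claim about any other syntax FFmpeg itself may accept.

```javascript
// Convert "8", "12.5" or "[-]hh:mm:ss[.xxx]" into seconds.
// parseTimeOffset is a hypothetical helper for illustration only.
function parseTimeOffset(text) {
  const pattern =
    /^(-)?(?:(\d+):(\d{1,2}):(\d{1,2}(?:\.\d+)?)|(\d+(?:\.\d+)?))$/;
  const match = pattern.exec(String(text).trim());
  if (!match) {
    throw new Error(`unrecognised time offset: ${text}`);
  }
  const sign = match[1] ? -1 : 1;
  if (match[5] !== undefined) {
    // Plain seconds, e.g. "8" or "12.5".
    return sign * Number(match[5]);
  }
  // hh:mm:ss[.xxx]
  const hours = Number(match[2]);
  const minutes = Number(match[3]);
  const seconds = Number(match[4]);
  return sign * (hours * 3600 + minutes * 60 + seconds);
}

console.log(parseTimeOffset('8'));           // 8
console.log(parseTimeOffset('00:01:30'));    // 90
console.log(parseTimeOffset('-00:00:02.5')); // -2.5
```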

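Video options:

-b set the bitrate (bit/s). FFmpeg appears to use VBR by default, so the value acts roughly as an average bitrate

-bitexact use only bit-exact algorithms (intended for codec testing)

-vb set the video bitrate (bit/s)

-vframes number set the number of video frames to convert

-r rate frame rate (fps). It can be changed, but the original author reports that non-standard frame rates caused audio/video desync and therefore used only 15 or 29.97

-s size set the resolution (320x240)

-aspect aspect set the display aspect ratio (4:3, 16:9 or 1.3333, 1.7777)

-croptop size set the top crop size (in pixels)

-cropbottom size set the bottom crop size (in pixels)

-cropleft size set the left crop size (in pixels)

-cropright size set the right crop size (in pixels)

-padtop size set the top padding size (in pixels)

-padbottom size set the bottom padding size (in pixels)

-padleft size set the left padding size (in pixels)

-padright size set the right padding size (in pixels)

-padcolor color set the padding colour (000000-FFFFFF)

-vn disable video

-vcodec codec force the video codec ('copy' to copy the stream)

-sameq use the same video quality as the source (VBR)

-pass n select the pass number (1 or 2). Two-pass encoding is very useful: the first pass collects statistics, and the second pass uses them to hit the requested bitrate accurately

-passlogfile file use file as the name of the two-pass log file

-newvideo add a new video stream after the current video stream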
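The two-pass workflow described under -pass and -passlogfile above can be sketched as a pair of argument lists, one per pass. This is a minimal illustration under stated assumptions: `buildTwoPassArgs`, the file names, and the `stats` log name are hypothetical; the first pass simply writes to the same output file and lets the second pass overwrite it; and the bitrate uses the -b spelling from this list (newer FFmpeg releases spell the video bitrate option -b:v). The snippet prints the commands and does not run them.

```javascript
// Build the argument lists for a two-pass encode.
// buildTwoPassArgs and the default log name 'stats' are hypothetical.
function buildTwoPassArgs(input, output, videoBitrate, logFile = 'stats') {
  const common = ['-y', '-i', input, '-b', videoBitrate, '-passlogfile', logFile];
  return [
    // Pass 1: collect statistics; audio is not needed, so drop it with -an.
    [...common, '-pass', '1', '-an', output],
    // Pass 2: encode for real, using the statistics from pass 1.
    [...common, '-pass', '2', output],
  ];
}

const passes = buildTwoPassArgs('input.avi', 'output.mp4', '500k');
for (const passArgs of passes) {
  console.log(['ffmpeg', ...passArgs].join(' '));
}
// prints:
// ffmpeg -y -i input.avi -b 500k -passlogfile stats -pass 1 -an output.mp4
// ffmpeg -y -i input.avi -b 500k -passlogfile stats -pass 2 output.mp4
```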

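Advanced video options:

-pix_fmt format set the pixel format; 'list' as the argument shows all supported pixel formats

-intra use only intra-frame coding

-qscale q quality-based VBR using the given value, range 0.01-255; smaller values mean better quality

-loop_input set the loop count of the input stream (currently works only for images)

-loop_output set the loop count of the output video; for example, 0 means loop forever when writing a GIF

-g int set the group-of-pictures (GOP) size

-cutoff int set the cutoff frequency

-qmin int set the minimum quantizer scale; used together with -qmax (maximum quantizer scale), for example -qmin 10 -qmax 31

-qmax int set the maximum quantizer scale

-qdiff int maximum difference between quantizer scales (VBR)

-bf int number of B-frames to use; supported for mpeg1, mpeg2 and mpeg4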

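Audio options:

-ab set the audio bitrate (unit: bit/s; older versions may have used kbit/s). The original author's advice: when -ac is set to stereo, specify half the target bitrate, for example 96 for 192 kbps. The default bitrate is fairly low, so for better sound set it to 160 kbps (80) or higher.

-aframes number set the number of audio frames to convert

-aq quality set the audio quality (codec-specific)

-ar rate set the audio sampling rate (unit: Hz); the PSP accepts only 24000

-ac channels set the number of audio channels: 1 is mono, 2 is stereo. Use 1 for a mono TV rip (it roughly halves the audio size) and 2 for a high-quality DVD rip

-an disable audio

-acodec codec set the audio codec ('copy' to copy the stream)

-vol volume set the audio volume, where the default 256 corresponds to 100%. The AC3 track on some DVD rips is very quiet, and this option can raise it during conversion. The original text gives 200 as "twice the original", but on a scale where 256 is normal, doubling the volume corresponds to 512

-newaudio add a new audio stream after the current audio stream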
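Because the -vol entry above uses 256 as the default (100%) level, a percentage has to be rescaled before it is passed to the option. A minimal sketch of that arithmetic, assuming the scale is linear; `volumeForPercent` is a hypothetical helper name:

```javascript
// The "default 256" from the -vol entry, treated as 100%.
const NORMAL_VOLUME = 256;

// Convert a percentage into a -vol value (hypothetical helper).
function volumeForPercent(percent) {
  if (typeof percent !== 'number' || !(percent >= 0)) {
    throw new Error('percent must be a non-negative number');
  }
  return Math.round((NORMAL_VOLUME * percent) / 100);
}

console.log(volumeForPercent(100)); // 256
console.log(volumeForPercent(200)); // 512
console.log(volumeForPercent(50));  // 128
```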

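Subtitle options:

-sn disable subtitles

-scodec codec set the subtitle codec ('copy' to copy the stream)

-newsubtitle add a new subtitle stream after the current one

-slang code set the ISO 639 language code (3 letters) of the subtitles

Audio/Video grab options:

-vc channel set the video grab channel (DV1394 only)

-tvstd standard set the television standard: NTSC, PAL (SECAM)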

Audio and video conversion:

To get an MP4 with good picture and sound quality at a small size, avoid a fixed video bitrate and use a VBR option so the encoder decides for itself. The audio bitrate can be raised a little above the source's; it sounds noticeably better without adding much size (adjust as needed).

Convert to FLV:

ffmpeg -i test.mp3 -ab 56k -ar 22050 -b 500k -r 15 -s 320x240 test.flv

ffmpeg -i test.wmv -ab 56k -ar 22050 -b 500k -r 15 -s 320x240 test.flv

Grab a thumbnail while converting the file format:

ffmpeg -i "test.avi" -y -f image2 -ss 8 -t 0.001 -s 350x240 "test.jpg"

Grab a frame from an existing FLV:

ffmpeg -i "test.flv" -y -f image2 -ss 8 -t 0.001 -s 350x240 "test.jpg"

Convert to 3GP:

ffmpeg -y -i test.mpeg -bitexact -vcodec h263 -b 128k -r 15 -s 176x144 -acodec aac -ac 2 -ar 22050 -ab 24k -f 3gp test.3gp

ffmpeg -y -i test.mpeg -ac 1 -acodec amr_nb -ar 8000 -s 176x144 -b 128k -r 15 test.3gp

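Parameter explanation (for a PSP conversion command):

-y (overwrite the output file: if 1.*** already exists, it is overwritten without asking)

-i "1.avi" (the input file is 1.avi in the same directory as ffmpeg; you can add a path or change the name)

-title "Test" (the title shown for the video on the PSP)

-vcodec xvid (compress the video with the XVID codec; do not change this)

-s 368x208 (output resolution 368x208; the source must be 16:9, otherwise the picture is distorted)

-r 29.97 (frame rate; this value is the usual choice)

-b 1500 (video bitrate. -b xxxx selects a constant bitrate; the number can be changed, but values above 1500 have no effect. A variable bitrate can be used instead, for example -qscale 4 or -qscale 6, where 4 gives higher quality than 6)

-acodec aac (encode the audio as AAC)

-ac 2 (number of channels, 1 or 2)

-ar 24000 (audio sampling rate; the PSP seems to support only 24000 Hz)

-ab 128 (audio bitrate; typical choices are 32, 64, 96 and 128)

-vol 200 (volume, described in the original as 200%; adjust to taste. On a scale where 256 is normal, 200 is slightly below normal)

-f psp (write the PSP-specific format)

-muxvb 768 (apparently the bitrate reported to the PSP; typical choices are 384, 512 and 768. When the original author set it to 1500, the PSP reported the file as corrupted)

"test.***" (output file name; you can add a path or change the name)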

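Today's recommended English article: "Introducing TensorFlow.js: Machine Learning in Javascript" by Josh Gordon and Sara Robinson

Original link: https://medium.com/tensorflow/introducing-tensorflow-js-machine-learning-in-javascript-bf3eab376db

Why we recommend it: Anyone following AI has heard of TensorFlow, but perhaps not of TensorFlow.js. It is a machine learning library that runs in your browser using JavaScript. If you are a front-end developer with an interest in machine learning, it is a great place to start learning.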

Introducing TensorFlow.js: Machine Learning in Javascript

We're excited to introduce TensorFlow.js, an open-source library you can use to define, train, and run machine learning models entirely in the browser, using Javascript and a high-level layers API. If you're a Javascript developer who's new to ML, TensorFlow.js is a great way to begin learning. Or, if you're an ML developer who's new to Javascript, read on to learn more about new opportunities for in-browser ML. In this post, we'll give you a quick overview of TensorFlow.js, and getting started resources you can use to try it out.

In-Browser ML

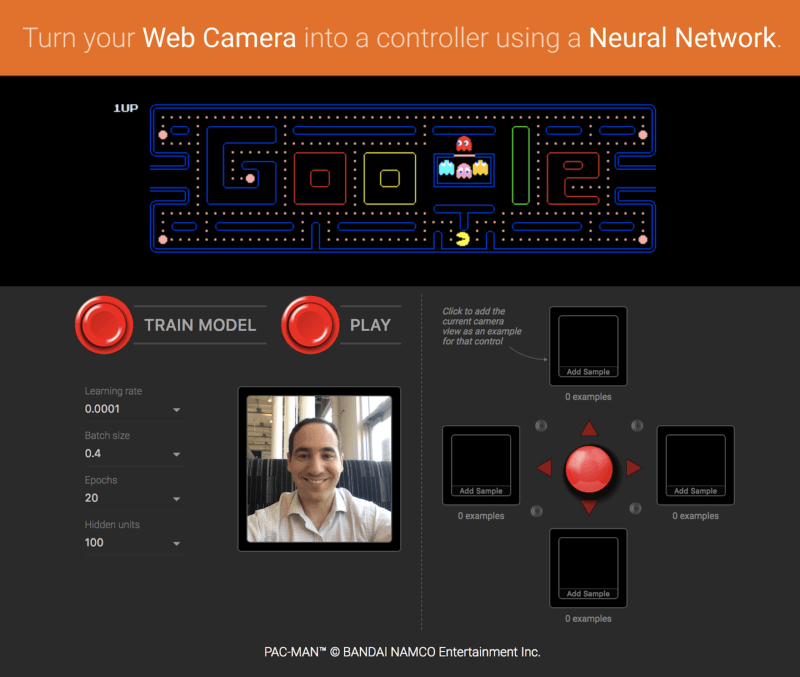

Running machine learning programs entirely client-side in the browser unlocks new opportunities, like interactive ML! If you're watching the livestream for the TensorFlow Developer Summit, during the TensorFlow.js talk you'll find a demo where @dsmilkov and @nsthorat train a model to control a PAC-MAN game using computer vision and a webcam, entirely in the browser. You can try it out yourself, too, with the link below, and find the source in the examples folder.

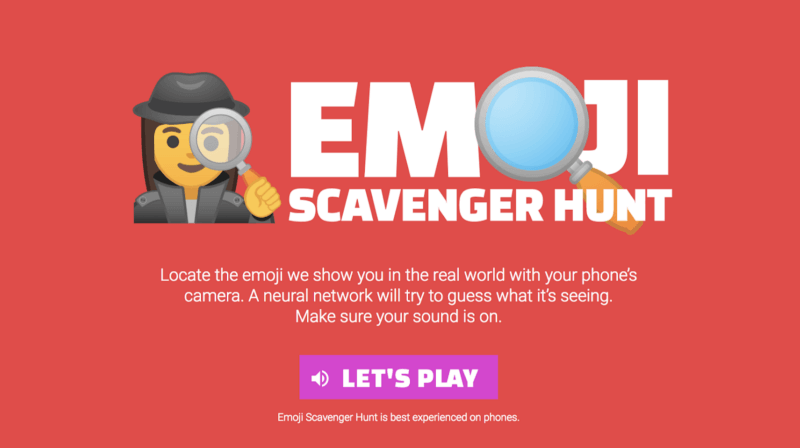

If you'd like to try another game, give the Emoji Scavenger Hunt a whirl, this time from a browser on your mobile phone.

ML running in the browser means that from a user's perspective, there's no need to install any libraries or drivers. Just open a webpage, and your program is ready to run. In addition, it's ready to run with GPU acceleration. TensorFlow.js automatically supports WebGL, and will accelerate your code behind the scenes when a GPU is available. Users may also open your webpage from a mobile device, in which case your model can take advantage of sensor data, say from a gyroscope or accelerometer. Finally, all data stays on the client, making TensorFlow.js useful for low-latency inference, as well as for privacy-preserving applications.

What can you do with TensorFlow.js?

If you're developing with TensorFlow.js, here are three workflows you can consider.

- You can import an existing, pre-trained model for inference. If you have an existing TensorFlow or Keras model you've previously trained offline, you can convert it into TensorFlow.js format and load it into the browser for inference.

- You can re-train an imported model. As in the Pac-Man demo above, you can use transfer learning to augment an existing model trained offline, using a small amount of data collected in the browser and a technique called Image Retraining. This is one way to train an accurate model quickly, using only a small amount of data.

- You can author models directly in the browser. You can also use TensorFlow.js to define, train, and run models entirely in the browser using Javascript and a high-level layers API. If you're familiar with Keras, the high-level layers API should feel familiar.

Let's see some code

If you like, you can head directly to the samples or tutorials to get started. These show how to export a model defined in Python for inference in the browser, as well as how to define and train models entirely in Javascript. As a quick preview, here's a snippet of code that defines a neural network to classify flowers, much like the one in the getting started guide on TensorFlow.org. Here, we'll define a model using a stack of layers.

import * as tf from '@tensorflow/tfjs';

const model = tf.sequential();

model.add(tf.layers.dense({inputShape: [4], units: 100}));

model.add(tf.layers.dense({units: 4}));

model.compile({loss: 'categoricalCrossentropy', optimizer: 'sgd'});
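To get a feel for the size of the model defined above, the sketch below counts its trainable parameters by hand. It assumes each dense layer holds one weight per input-unit pair plus one bias per unit, which is the usual definition of a fully connected layer; `denseParamCount` is a hypothetical helper, not a TensorFlow.js function.

```javascript
// Parameters in a fully connected layer: a weight matrix of
// inputSize x units plus one bias per unit (assumes biases are enabled).
function denseParamCount(inputSize, units) {
  return inputSize * units + units;
}

const hiddenParams = denseParamCount(4, 100); // first layer: 4 inputs, 100 units
const outputParams = denseParamCount(100, 4); // second layer: 100 inputs, 4 units

console.log(hiddenParams);                // 500
console.log(outputParams);                // 404
console.log(hiddenParams + outputParams); // 904
```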

The layers API we're using here supports all of the Keras layers found in the examples directory (including Dense, CNN, LSTM, and so on). We can then train our model using the same Keras-compatible API with a method call:

await model.fit(

xData, yData, {

batchSize: batchSize,

epochs: epochs

});

The model is now ready to use to make predictions:

// Get measurements for a new flower to generate a prediction.

// The first argument is the data, and the second is the shape.

const inputData = tf.tensor2d([[4.8, 3.0, 1.4, 0.1]], [1, 4]);

// Get the highest confidence prediction from our model.

// argMax(1) reduces over the class axis; the default axis 0 is the batch axis.

const result = model.predict(inputData);

const winner = irisClasses[result.argMax(1).dataSync()[0]];

// Display the winner.

console.log(winner);
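The prediction step above boils down to picking the index of the largest score in the model's output row. The plain-JavaScript sketch below shows that selection on a made-up score array, without depending on TensorFlow.js. The scores and the function names are hypothetical; the second function is included only to show why the axis matters for an output of shape [1, 4].

```javascript
// Index of the largest value in each row (reduces across the class scores).
function argMaxPerRow(rows) {
  return rows.map((row) =>
    row.reduce((best, value, i) => (value > row[best] ? i : best), 0));
}

// Index of the row holding the largest value in each column
// (reduces across the batch axis instead).
function argMaxPerColumn(rows) {
  return rows[0].map((_, col) =>
    rows.reduce((best, row, r) => (row[col] > rows[best][col] ? r : best), 0));
}

// One sample with four made-up class scores, i.e. shape [1, 4].
const scores = [[0.1, 0.7, 0.15, 0.05]];

console.log(argMaxPerRow(scores));    // [ 1 ]
console.log(argMaxPerColumn(scores)); // [ 0, 0, 0, 0 ]
```

With a single sample in the batch, reducing across the batch axis always yields index 0, so the class index has to come from the per-row reduction.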

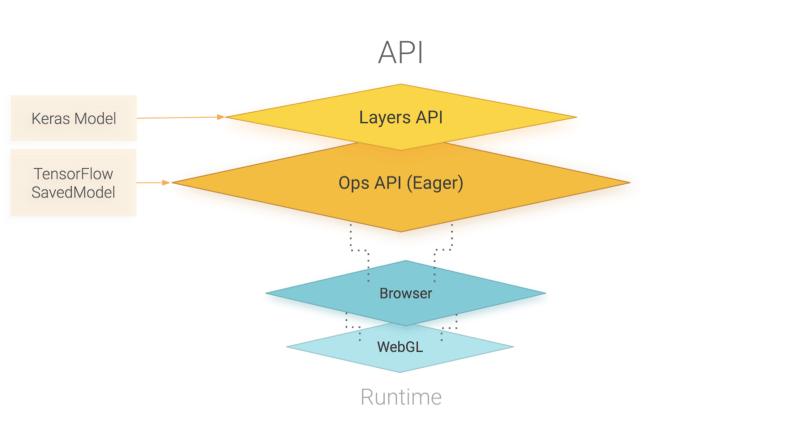

TensorFlow.js also includes a low-level API (previously deeplearn.js) and support for Eager execution. You can learn more about these by watching the talk at the TensorFlow Developer Summit.

An overview of TensorFlow.js APIs. TensorFlow.js is powered by WebGL and provides a high-level layers API for defining models, and a low-level API for linear algebra and automatic differentiation. TensorFlow.js supports importing TensorFlow SavedModels and Keras models.

How does TensorFlow.js relate to deeplearn.js?

Good question! TensorFlow.js, an ecosystem of JavaScript tools for machine learning, is the successor to deeplearn.js, which is now called TensorFlow.js Core. TensorFlow.js also includes a Layers API, which is a higher-level library for building machine learning models that uses Core, as well as tools for automatically porting TensorFlow SavedModels and Keras hdf5 models. For answers to more questions like this, check out the FAQ.
