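Every day we recommend one quality open-source project from GitHub and one hand-picked English article on technology or programming, in the original. Follow Open Source Daily (开源日报)!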

Issue 0 of Open Source Daily came out a while ago; today we are finally launching Issue 1. Stay tuned.

Today's recommended open-source project: Gifski, a GIF maker. GitHub: https://github.com/ImageOptim/gifski

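Why we recommend it: Gifski turns a sequence of images or a video into a high-quality GIF. Converting is not the hard part; keeping the quality high is. The project also made GitHub Trending's list of fastest-growing repositories.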

Usage

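Gifski runs on Windows, macOS, and Linux. The only difference is that the macOS version has a built-in frame extractor, so you can simply drag a video onto the app window to get a GIF. On the other platforms you first have to split the video into frames with a third-party program, and then run gifski from the command line.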


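That isn't really a problem, though. Remember ffmpeg, which Open Source Workshop (开源工场) covered earlier (https://openingsource.org/553/)? That command-line program is the perfect frame-splitting tool. Below I'll walk through using both tools, with a video as the example.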

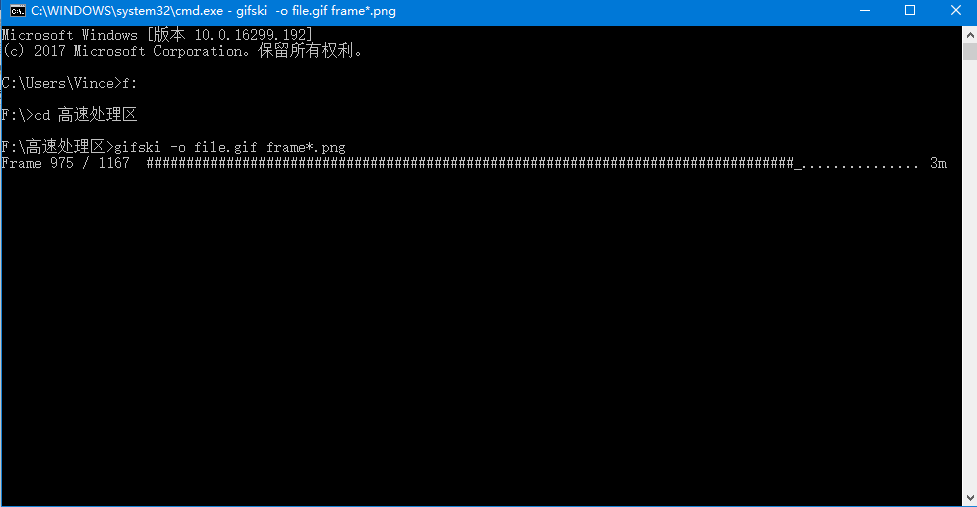

Environment: Windows 10

Source video: 逆浪春秋 by 祖娅纳惜. It runs at 30 FPS, which puts the tool's performance to a better test.

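If you're new to command-line tools, follow these steps:

1. Put ffmpeg, gifski, and the video you want to convert in the same folder.
2. Press Win+R, type `cmd`, and open the Command Prompt.
3. Type `X:`, where X is the drive letter of that folder.
4. Type `cd xxxx/xxxx`, i.e. the folder's path, without the drive letter.

Now you can call both tools.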

In cmd, call ffmpeg to split the video into frames:

`ffmpeg -i video.mp4 frame%04d.png`

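`%04d` pads the frame number to four digits, so the files are numbered from frame0001.png upward. If the video is long you can widen the number (for example to `%05d`), but long clips aren't recommended: that many frames drastically increases GIF generation time. In fact, 1000 frames already take more than ten minutes to encode.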
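To make the file naming and the time cost concrete, here is a small Python sketch. It assumes ffmpeg numbers frames from 1, as described above, and reuses the roughly 0.7 s per 1280×720 frame measured later in this walkthrough; that rate depends on the machine, and the 40-second clip is a made-up example.

```python
# Sketch: file names produced by ffmpeg's frame%04d.png pattern, plus a rough
# gifski time estimate. SECONDS_PER_FRAME is the author's 1280x720 measurement
# on one Windows 10 machine; treat it as an assumption, not a constant.

SECONDS_PER_FRAME = 0.7


def frame_name(index: int, width: int = 4) -> str:
    """Name ffmpeg writes for 1-based frame `index` with a %0<width>d pattern."""
    return f"frame{index:0{width}d}.png"


def frame_count(duration_s: float, source_fps: float) -> int:
    """Frames extracted when ffmpeg keeps every frame of the source video."""
    return round(duration_s * source_fps)


def encode_minutes(frames: int, seconds_per_frame: float = SECONDS_PER_FRAME) -> float:
    """Rough encoding time in minutes, assuming a constant cost per frame."""
    return frames * seconds_per_frame / 60


if __name__ == "__main__":
    print(frame_name(1), frame_name(1234), frame_name(1, width=5))
    # The 1000-frame case from the text: about 11.7 minutes.
    print(f"{encode_minutes(1000):.1f} min")
    # A hypothetical 40 s clip at 30 fps: 1200 frames, about 14 minutes.
    n = frame_count(40, 30)
    print(n, f"{encode_minutes(n):.1f} min")
```

Widening `%04d` to `%05d` only changes the padding; it does not reduce the frame count, which is what drives the encoding time.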

When it finishes, delete the frames you don't need.
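Deleting frames by hand in Explorer works fine; if you prefer to script it, the Python sketch below keeps only a numbered range of frames. The helper `prune_frames` and its `dry_run` switch are hypothetical names for this example, and it assumes the `frameNNNN.png` names produced by the command above. Alternatively, ffmpeg's `-ss` (start time) and `-t` (duration) options can limit extraction to the segment you want in the first place.

```python
import re
from pathlib import Path
from typing import List

# Matches the names written by ffmpeg's frame%04d.png pattern (any padding width).
FRAME_RE = re.compile(r"^frame(\d+)\.png$")


def prune_frames(folder: str, first: int, last: int, dry_run: bool = True) -> List[str]:
    """Remove frame files whose number falls outside [first, last].

    Returns the names of the files that were (or, with dry_run=True, would be)
    removed. Nothing is deleted unless dry_run is False.
    """
    removed = []
    for path in sorted(Path(folder).glob("frame*.png")):
        match = FRAME_RE.match(path.name)
        if match is None:
            continue  # leave files that don't follow the naming pattern alone
        number = int(match.group(1))
        if not first <= number <= last:
            removed.append(path.name)
            if not dry_run:
                path.unlink()
    return removed


if __name__ == "__main__":
    # Preview first: which frames outside 0031-0120 would be removed here?
    print(prune_frames(".", 31, 120))
    # Then delete them for real:
    # prune_frames(".", 31, 120, dry_run=False)
```

Because the gifski command uses the `frame*.png` wildcard, it only sees the files that remain, so gaps left in the numbering should not matter.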

Next, combine these images into a single GIF.

In cmd, call gifski:

`gifski -o file.gif frame*.png`

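A word of warning: on my machine, a 1280×720 GIF takes about 0.7 seconds per frame, so budget your time accordingly. This run uses the default settings, i.e. a frame rate of 20 fps.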

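Because 20 fps is lower than the source's 30 fps, the GIF plays back noticeably slower: every extracted frame is kept, so 30 fps footage shown at 20 fps runs at two-thirds speed.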
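The slowdown is simple arithmetic. Assuming gifski keeps every PNG it is given and only changes how long each one is shown (which is what the slowdown above implies), the GIF's length is the frame count divided by the GIF frame rate. The sketch below uses a hypothetical 10-second clip; the last two cases show two ways to get normal speed: pass `--fps 30`, or extract fewer frames with ffmpeg's `fps` filter (`ffmpeg -i video.mp4 -vf fps=20 frame%04d.png`) and keep gifski's default.

```python
def gif_playback(frames: int, gif_fps: float, source_fps: float) -> tuple:
    """Return (GIF duration in seconds, playback speed relative to the source).

    Assumes every extracted frame ends up in the GIF, each shown for 1/gif_fps s,
    and that the frames were extracted at source_fps.
    """
    duration_s = frames / gif_fps
    speed = gif_fps / source_fps
    return duration_s, speed


if __name__ == "__main__":
    frames = 10 * 30  # hypothetical 10 s clip extracted at the source's 30 fps
    print(gif_playback(frames, gif_fps=20, source_fps=30))  # 15 s, two-thirds speed
    print(gif_playback(frames, gif_fps=30, source_fps=30))  # 10 s, normal speed
    # Extracting at 20 fps instead (ffmpeg -vf fps=20) leaves 200 frames:
    print(gif_playback(10 * 20, gif_fps=20, source_fps=20))  # 10 s, normal speed
```

The trade-off: `--fps 30` keeps every frame (smoother, but larger and slower to encode), while downsampling with ffmpeg gives fewer frames to encode at the cost of some smoothness.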

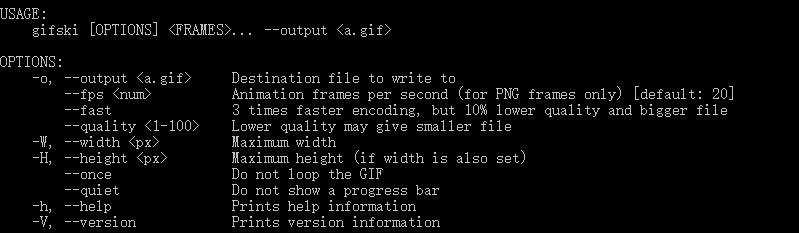

One of gifski's options is `--fps`, which sets the frame rate. Here we make the GIF again at 30 fps and name it h_sped.gif:

`gifski -o h_sped.gif --fps 30 frame*.png`
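If you run this often, the two steps can be wrapped in a script. The Python sketch below is one way to do it, not part of either tool: `video_to_gif` and the helper names are hypothetical, it assumes both `ffmpeg` and `gifski` can be found on the PATH, and it expands the `frame*.png` wildcard itself, because `subprocess` does not go through a shell that would do it.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def ffmpeg_split_cmd(video: str, pattern: str = "frame%04d.png") -> List[str]:
    """Arguments for the frame-splitting command used in this walkthrough."""
    return ["ffmpeg", "-i", video, pattern]


def gifski_cmd(output: str, frames: List[str], fps: Optional[int] = None) -> List[str]:
    """Arguments for gifski; frames are passed explicitly in name order."""
    cmd = ["gifski", "-o", output]
    if fps is not None:
        cmd += ["--fps", str(fps)]  # omit to keep gifski's default frame rate
    return cmd + sorted(frames)


def video_to_gif(video: str, output: str, fps: Optional[int] = None, workdir: str = ".") -> None:
    """Split `video` into PNG frames, then encode them with gifski.

    Run it in a folder without old frame*.png files, or stale frames from a
    previous video would be mixed into the GIF.
    """
    subprocess.run(ffmpeg_split_cmd(video), cwd=workdir, check=True)
    # No shell is involved, so expand the frame*.png wildcard here.
    frames = [p.name for p in Path(workdir).glob("frame*.png")]
    subprocess.run(gifski_cmd(output, frames, fps), cwd=workdir, check=True)


if __name__ == "__main__":
    print(ffmpeg_split_cmd("video.mp4"))
    print(gifski_cmd("h_sped.gif", ["frame0002.png", "frame0001.png"], fps=30))
    # video_to_gif("video.mp4", "h_sped.gif", fps=30)  # needs ffmpeg and gifski
```

Sorting by name relies on the zero padding from `%04d`; past 9999 frames, a name like frame10000.png sorts before frame1001.png, which is another reason to widen the pattern for very long clips.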

The result (the GIF is too large to embed here, so only a written description is given):

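Toward the end there are clearly dropped frames. This may be a problem with the program, but it is more likely a limit of the computer's performance. In practice, I don't recommend images this large or clips this long; after all, viewers also have a hard time loading a GIF that big.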

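Overall, gifski is a powerful tool for making high-quality GIFs, but it does only one thing: you first have to split the video into PNGs with ffmpeg, which makes the workflow a bit tedious, and it gives you little control over the output size. So on Windows I don't really recommend gifski; try the software recommended below instead.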

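On Linux, ImageMagick, which ships with Ubuntu, gives almost exactly the same results (fast and high quality) with a very similar workflow, so the two are about even; both also rely heavily on ffmpeg. I'm not familiar with the alternatives on macOS, but gifski works there as well.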

While comparing tools, I also stumbled on a remarkable all-in-one: ScreenToGif.

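It bundles ffmpeg and gifski together and can also record your screen. The bundled version is cut down and doesn't support every video format, but it's already very capable, and you can pick the frames to convert by hand. Best of all, it's a GUI application rather than a command-line one! Anyone who doesn't know the command line, or is tired of it, is in luck; just treat the command-line tutorial above as a learning exercise... (please don't hit me, haha)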

Today's recommended English article: "Machine learning meets culture" from Google

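Why we recommend it: Machine learning is one of today's hottest topics. What cultural circumstances and reflections lie behind all the buzz? When machine learning meets culture at this crossroads, what fascinating reactions follow? Humans and machines alike will run into this question and sooner or later have to think about it. A group of scientists is already using machine learning to work with artworks.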

Machine learning meets culture

Whether helping physicians identify disease or finding photos of “hugs,” AI is behind a lot of the work we do at Google. And at our Arts & Culture Lab in Paris, we’ve been experimenting with how AI can be used for the benefit of culture. Today, we’re sharing our latest experiments: prototypes that build on seven years of work in partnership with 1,500 cultural institutions around the world. Each of these experimental applications runs AI algorithms in the background to let you unearth cultural connections hidden in archives, and even find artworks that match your home decor.

Art Palette

From interior design to fashion, color plays a fundamental role in expression, communicating personality, mood and emotion. Art Palette lets you choose a color palette, and using a combination of computer vision algorithms, it matches artworks from cultural institutions from around the world with your selected hues. See how Van Gogh's Irises share a connection of color with a 16th century Iranian folio and Monet’s water lilies. You can also snap a photo of today's outfit or your home decor and click through to learn about the history behind the artworks that match your colors.

Watch how legendary fashion designer Sir Paul Smith uses Art Palette.

Giving historic photos a new lease on LIFE

Beginning in 1936, LIFE Magazine captured some of the most iconic moments of the 20th century. In its 70-year run, millions of photos were shot for the magazine, but only 5 percent of them were published at the time. 4 million of those photos are now available for anyone to look through. But with an archive that stretches 6,000 feet (about 1,800 meters) across three warehouses, where would you start exploring? The experiment LIFE Tags uses Google’s computer vision algorithm to scan, analyze and tag all the photos from the magazine’s archives, from the A-line dress to the zeppelin. Using thousands of automatically created labels, the tool turns this unparalleled record of recent history and culture into an interactive web of visuals everyone can explore. So whether you’re looking for astronauts, an Afghan Hound or babies making funny faces, you can navigate the LIFE Magazine picture archive and find them with the press of a button.

Identifying MoMA artworks through machine learning

Starting with their first exhibition in 1929, The Museum of Modern Art in New York took photos of their exhibitions. While the photos documented important chapters of modern art, they lacked information about the works in them. To identify the art in the photos, one would have had to comb through 30,000 photos—a task that would take months even for the trained eye. The tool built in collaboration with MoMA did the work of automatically identifying artworks—27,000 of them—and helped turn this repository of photos into an interactive archive of MoMA’s exhibitions.

We unveiled our first set of experiments that used AI to aid cultural discoveries in 2016. Since then we’ve collaborated with institutions and artists, including stage designer Es Devlin, who created an installation for the Serpentine Galleries in London that uses machine learning to generate poetry. We hope these experimental applications will not only lead you to explore something new, but also shape our conversations around the future of technology, its potential as an aid for discovery and creativity.

You can try all our experiments at g.co/artsexperiments or through the free Google Arts & Culture app for iOS and Android.

Discussion QQ group: 202790710